Difference between revisions of "Theory Notes"

(→Problem) |

(→Matrix Elements of H) |

||

| (106 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

<center> | <center> | ||

| − | <H1><p>Notes on solving the Schrödinger equation in Hylleraas coordinates for heliumlike atoms</p> | + | <H1><p>Notes on solving the Schrödinger equation in Hylleraas coordinates</p><p> for heliumlike atoms</p> |

<p style="font-size: 75%;">Gordon W.F. Drake</p> | <p style="font-size: 75%;">Gordon W.F. Drake</p> | ||

<p style="font-size: 75%;">Department of Physics, University of Windsor</p> | <p style="font-size: 75%;">Department of Physics, University of Windsor</p> | ||

<p style="font-size: 75%;">Windsor, Ontario, Canada N9B 3P4</p> | <p style="font-size: 75%;">Windsor, Ontario, Canada N9B 3P4</p> | ||

| − | <p style="font-size: 50%; text-align:left">(Transcribed from hand-written notes and edited by Lauren Moffatt. Last revised | + | <p style="font-size: 50%; text-align:left">(Transcribed from hand-written notes and edited by Lauren Moffatt. Last revised November 22, 2017 to correct a missing subscript in the expression for $B_1$ in the Hamiltonian in Sect. 11 General Hermitian Property.)</p> |

</H1> | </H1> | ||

</center> | </center> | ||

| Line 28: | Line 28: | ||

But <math>\begin{eqnarray} \frac{\hbar^2}{m}(\frac{me^2}{\hbar^2})^2 = \frac{e^2}{a_0} \end{eqnarray} </math> is the hartree atomic unit of of energy <math>(E_h = 27.211\,385\,05(60)</math> eV, or equivalently <math>E_h/(hc) = 2194.746\,313\,708(11)</math> m<math>^{-1})</math>. Therefore, after multiplying through by <math>a_0/(Ze)^2</math>, the problem to be solved in Z-scaled dimensionless units becomes | But <math>\begin{eqnarray} \frac{\hbar^2}{m}(\frac{me^2}{\hbar^2})^2 = \frac{e^2}{a_0} \end{eqnarray} </math> is the hartree atomic unit of of energy <math>(E_h = 27.211\,385\,05(60)</math> eV, or equivalently <math>E_h/(hc) = 2194.746\,313\,708(11)</math> m<math>^{-1})</math>. Therefore, after multiplying through by <math>a_0/(Ze)^2</math>, the problem to be solved in Z-scaled dimensionless units becomes | ||

| − | \begin{equation} [-\frac{1}{2}(\nabla^2_{\rho_1}+\nabla^2_{\rho_2}) - | + | \begin{equation} \left[-\frac{1}{2}(\nabla^2_{\rho_1}+\nabla^2_{\rho_2}) - |

| − | \frac{1}{\rho_1} - \frac{1}{\rho_2} + \frac{Z^{-1}}{\rho_{12}}]\psi = | + | \frac{1}{\rho_1} - \frac{1}{\rho_2} + \frac{Z^{-1}}{\rho_{12}}\right]\psi = |

\varepsilon\psi\nonumber \end{equation} | \varepsilon\psi\nonumber \end{equation} | ||

| Line 47: | Line 47: | ||

\psi({\bf r}_1,{\bf r}_2) = \frac{1}{\sqrt{2}} \left[ u_1(r_1) u_2(r_2) \pm u_2(r_1) u_1(r_2) \right] \nonumber | \psi({\bf r}_1,{\bf r}_2) = \frac{1}{\sqrt{2}} \left[ u_1(r_1) u_2(r_2) \pm u_2(r_1) u_1(r_2) \right] \nonumber | ||

\end{equation} | \end{equation} | ||

| − | for the <math>1s^2\ ^1\!S</math> ground state. Because of the | + | for the <math>1s^2\ ^1\!S</math> ground state. Because of the <math>\frac{1}{r_{12}}</math> term in the Schrödinger equation, the exact solution connot be expressed in this form as a separable product. However, the Hartree-Fock approximation corresponds to finding the best possible solution to the Schrödinger equation |

\begin{equation} | \begin{equation} | ||

H\psi({\bf r}_1,{\bf r}_2) = E\psi({\bf r}_1,{\bf r}_2)\nonumber | H\psi({\bf r}_1,{\bf r}_2) = E\psi({\bf r}_1,{\bf r}_2)\nonumber | ||

| Line 118: | Line 118: | ||

Expand | Expand | ||

\begin{equation} | \begin{equation} | ||

| − | \psi({\bf r}_1,{\bf r}_2)= C_0u^{(s)}_1(r_1)u^{(s)}_1(r_2) + C_1u^{(P)}_1({\bf r}_1)u^{(P)}_1({\bf r}_2){\cal Y}^0_{1,1,0}(\hat{\bf r}_1, \hat{\bf r}_2)+C_2u^{(d)}_1({\bf r}_1)u^{(d)}_2({\bf r}_2){\cal Y}^0_{2,2,0}(\hat{\bf r}_1, \hat{\bf r}_2)+... \pm exchange | + | \psi({\bf r}_1,{\bf r}_2)= C_0u^{(s)}_1(r_1)u^{(s)}_1(r_2) + C_1u^{(P)}_1({\bf r}_1)u^{(P)}_1({\bf r}_2){\cal Y}^0_{1,1,0}(\hat{\bf r}_1, \hat{\bf r}_2)+C_2u^{(d)}_1({\bf r}_1)u^{(d)}_2({\bf r}_2){\cal Y}^0_{2,2,0}(\hat{\bf r}_1, \hat{\bf r}_2)+... \pm {\rm\ exchange} |

\end{equation} | \end{equation} | ||

where | where | ||

| Line 159: | Line 159: | ||

r_{12}^2 = r_1^2 + r_2^2 - 2r_1r_2\cos\theta_{12} | r_{12}^2 = r_1^2 + r_2^2 - 2r_1r_2\cos\theta_{12} | ||

\end{equation} | \end{equation} | ||

| − | and | + | and, from the spherical harmonic addition theorem, |

\begin{equation} | \begin{equation} | ||

\cos(\theta_{12})=\frac{4\pi}{3}\sum^1_{m=-1}Y^{m*}_1(\theta_1,\varphi_1)Y^m_1(\theta_2,\varphi_2) | \cos(\theta_{12})=\frac{4\pi}{3}\sum^1_{m=-1}Y^{m*}_1(\theta_1,\varphi_1)Y^m_1(\theta_2,\varphi_2) | ||

| Line 165: | Line 165: | ||

Consider first the S-states. The <math>r_{12}^0</math> terms are like the ss terms in a CI calculation. The | Consider first the S-states. The <math>r_{12}^0</math> terms are like the ss terms in a CI calculation. The | ||

<math>r_{12}^2</math> terms bring in p-p type contributions, and the higher powers bring | <math>r_{12}^2</math> terms bring in p-p type contributions, and the higher powers bring | ||

| − | in d-d, f-f etc type terms. In general | + | in d-d, f-f etc. type terms. In general |

\begin{equation} | \begin{equation} | ||

| Line 195: | Line 195: | ||

\Psi(r_2,r_2) = \sum c_{ijk}r_1^ir_2^{j+2}r_{12}^ke^{-\alpha r_1-\beta | \Psi(r_2,r_2) = \sum c_{ijk}r_1^ir_2^{j+2}r_{12}^ke^{-\alpha r_1-\beta | ||

r_2}\mathcal{Y}^M_{022}(\hat{r}_1,\hat{r}_2) | r_2}\mathcal{Y}^M_{022}(\hat{r}_1,\hat{r}_2) | ||

| − | +\sum d_{ijk}r_1^{i+1}r_2^{j+1}r_{12}e^{-\alpha^\prime r_1 - \beta^\prime | + | +\sum d_{ijk}r_1^{i+1}r_2^{j+1}r_{12^k}e^{-\alpha^\prime r_1 - \beta^\prime |

r_2}\mathcal{Y}^M_{112}(\hat{r}_1,\hat{r}_2)\nonumber | r_2}\mathcal{Y}^M_{112}(\hat{r}_1,\hat{r}_2)\nonumber | ||

\end{equation} | \end{equation} | ||

| − | For F-states, one would need | + | For F-states, one would need <math>(sf)F</math> and <math>(pd)F</math> terms. |

| − | For G-states, one would need | + | For G-states, one would need <math>(sg)G</math>, <math>(pf)G</math> and <math>(dd^\prime)G</math> terms. |

Completeness of the radial functions can be proven by considering the | Completeness of the radial functions can be proven by considering the | ||

| Line 217: | Line 217: | ||

\end{equation} | \end{equation} | ||

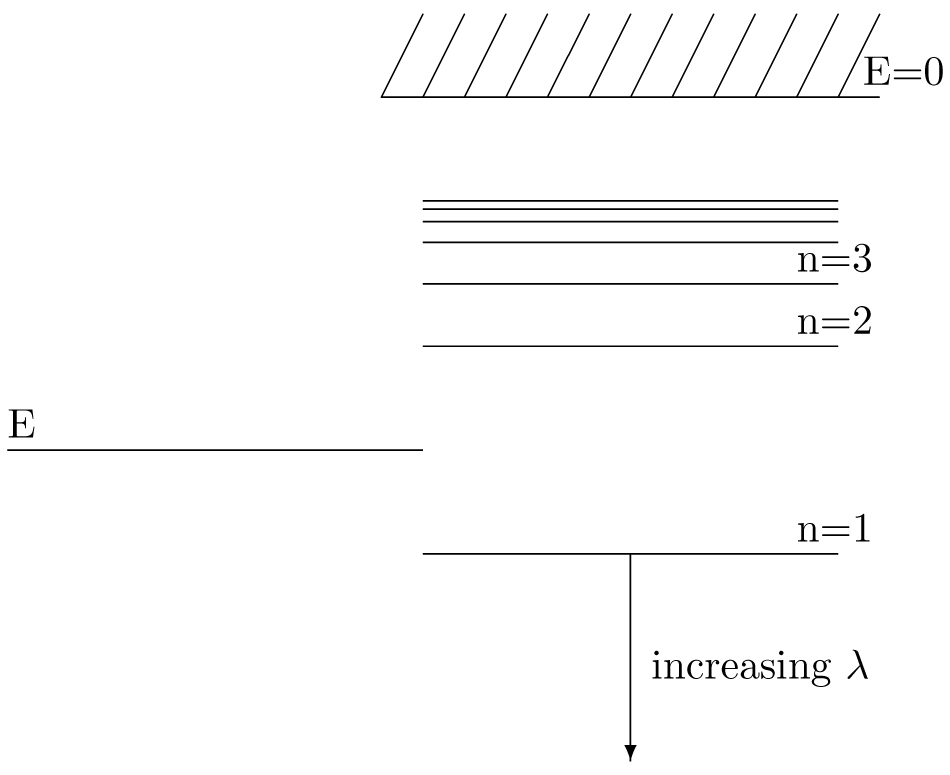

| − | For fixed E and variable | + | For fixed E and variable <math>\lambda</math> (nuclear charge). |

| − | The eigenvalues are | + | The eigenvalues are <math>\lambda_n = (E/E_n)^{1/2}</math>, where <math>E_n =- \frac{1}{2n^2}</math> |

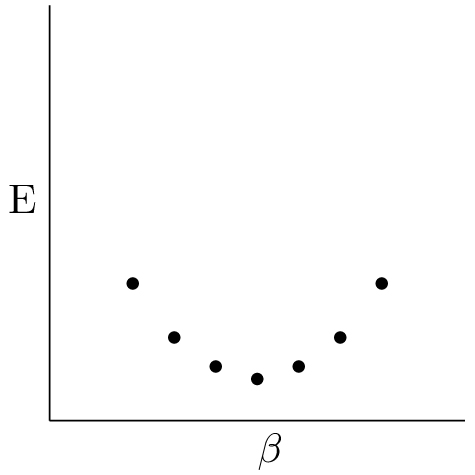

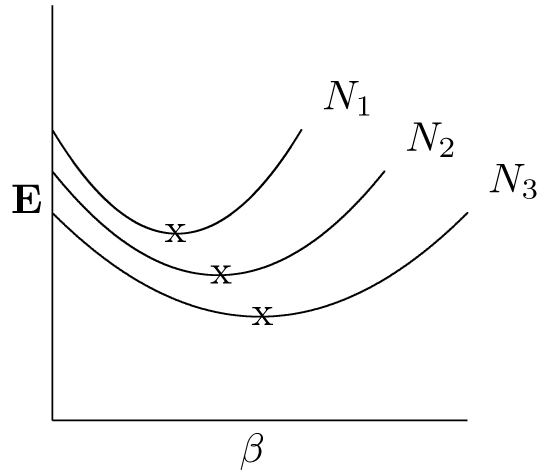

[[File:Pic2.jpg|500px]] | [[File:Pic2.jpg|500px]] | ||

| Line 249: | Line 249: | ||

completeness of the variational basis set. | completeness of the variational basis set. | ||

| − | [See also B Klahn and W.A. Bingel Theo. Chim. Acta (Berlin) < | + | [See also B Klahn and W.A. Bingel Theo. Chim. Acta (Berlin) <strong>44</strong>, 9 and 27 |

(1977)]. | (1977)]. | ||

| Line 257: | Line 257: | ||

| − | \ | + | \[ |

\Psi({\bf r}_1,{\bf r}_2) = \sum^N_{m=1}c_m\varphi_m\nonumber | \Psi({\bf r}_1,{\bf r}_2) = \sum^N_{m=1}c_m\varphi_m\nonumber | ||

| − | \ | + | \] |

| − | where | + | where <math>m</math> represents the <math>m</math>'th combination of <math>i,j,k</math> and |

\begin{equation} | \begin{equation} | ||

\varphi_{ijk}=r_1^ir_2^jr_{12}^ke^{-\alpha r_1 - \beta | \varphi_{ijk}=r_1^ir_2^jr_{12}^ke^{-\alpha r_1 - \beta | ||

| − | r_2}\mathcal{Y}^M_{l_1,l_2,L}(\hat{r}_1,\hat{r}_2) \pm exchange\nonumber | + | r_2}\mathcal{Y}^M_{l_1,l_2,L}(\hat{r}_1,\hat{r}_2) \pm \mbox{exchange}\nonumber |

\end{equation} | \end{equation} | ||

| Line 284: | Line 284: | ||

\sin(\theta) & \cos(\theta) | \sin(\theta) & \cos(\theta) | ||

\end{array} \right) | \end{array} \right) | ||

| − | \\ | + | \\[5pt] |

= & \left(\begin{array}{lr} | = & \left(\begin{array}{lr} | ||

cH_{11}+sH_{12} & cH_{12} + sH_{22}\\ | cH_{11}+sH_{12} & cH_{12} + sH_{22}\\ | ||

| Line 293: | Line 293: | ||

s & c | s & c | ||

\end{array}\right) | \end{array}\right) | ||

| − | \\ | + | \\[5pt] |

| − | |||

= & \left(\begin{array}{lr} | = & \left(\begin{array}{lr} | ||

c^2H_{11}+s^2H_{22} + 2csH_{12} & (c^2-s^2)H_{12}+cs(H_{22}-H_{11})\\ | c^2H_{11}+s^2H_{22} + 2csH_{12} & (c^2-s^2)H_{12}+cs(H_{22}-H_{11})\\ | ||

| Line 306: | Line 305: | ||

\begin{equation} | \begin{equation} | ||

| − | + | \left[\cos^2(\theta)-\sin^2(\theta)\right]H_{12} = | |

\cos(\theta)\sin(\theta)(H_{11}-H_{22})\nonumber | \cos(\theta)\sin(\theta)(H_{11}-H_{22})\nonumber | ||

\end{equation} | \end{equation} | ||

| Line 323: | Line 322: | ||

\begin{equation} | \begin{equation} | ||

| − | \sin(\theta)=-sgn(H_{12})\left(\frac{r-\omega}{2r}\right)^{1/2}\nonumber | + | \sin(\theta)=-\mbox{sgn}(H_{12})\left(\frac{r-\omega}{2r}\right)^{1/2}\nonumber |

\end{equation} | \end{equation} | ||

| Line 348: | Line 347: | ||

- Gives all the eigenvalues and eigenvectors, but it is slow | - Gives all the eigenvalues and eigenvectors, but it is slow | ||

| − | - First orthonormalize the | + | - First orthonormalize the <math>N</math>-dimensional basis set; i.e. form linear combinations |

\begin{equation} | \begin{equation} | ||

| Line 402: | Line 401: | ||

with <math>R=TS</math>. | with <math>R=TS</math>. | ||

| − | If H is the matrix with elements | + | If H is the matrix with elements <math>H_{mn} = \langle \varphi_m | \varphi_n \rangle </math>, then H |

expressed in the <math>\Phi_m</math> basis set is | expressed in the <math>\Phi_m</math> basis set is | ||

| Line 409: | Line 408: | ||

\end{equation} | \end{equation} | ||

| − | We next diagonalize | + | We next diagonalize <math>H^\prime</math> by finding an orthogonal transformation W such |

that | that | ||

| Line 422: | Line 421: | ||

\end{equation} | \end{equation} | ||

| − | The | + | The q'th eigenvector is |

\begin{equation} | \begin{equation} | ||

| Line 436: | Line 435: | ||

===The Power Method=== | ===The Power Method=== | ||

| − | - Based on the observation that if H has one eigenvalue, | + | - Based on the observation that if H has one eigenvalue, <math>\lambda_M</math>, much bigger than all the rest, and <math>\chi = \left( |

| − | \begin{array}{c}a_1\\a_2\\\vdots | + | \begin{array}{c}a_1\\a_2\\\vdots\end{array}\right)</math> |

| − | is an arbitrary starting vector, then | + | is an arbitrary starting vector, then <math>\chi = \sum_{q=1}^N x_q\Psi^{(q)}</math>. |

\begin{equation} | \begin{equation} | ||

| Line 444: | Line 443: | ||

\end{equation} | \end{equation} | ||

| − | provided | + | provided <math>x_M\neq 0</math>. |

To pick out the eigenvector corresponding to any eigenvalue, write the original problem in the form | To pick out the eigenvector corresponding to any eigenvalue, write the original problem in the form | ||

| Line 459: | Line 458: | ||

\end{equation} | \end{equation} | ||

| − | where | + | where <math>G=(H-\lambda_qO)^{-1}O</math> with eigenvalues <math>\frac{1}{\lambda_n - \lambda_q}</math>. |

| − | By picking | + | By picking <math>\lambda_q</math> close to any one of the <math>\lambda_n</math>, say |

| − | + | <math>\lambda_{n^\prime}</math>, then <math>\frac{1}{\lambda_n-\lambda_q}</math> is much larger for | |

| − | + | <math>n=n^\prime</math> than for any other value. The sequence is then | |

\begin{array}{c} | \begin{array}{c} | ||

| Line 472: | Line 471: | ||

\end{array} | \end{array} | ||

| − | until the ratios of components in | + | until the ratios of components in <math>\chi_n</math> stop changing. |

- To avoid matrix inversion and multiplication, note that the sequence is equivalent to | - To avoid matrix inversion and multiplication, note that the sequence is equivalent to | ||

| − | |||

\begin{equation} | \begin{equation} | ||

F \chi_n = \left( \lambda - \lambda_q \right) O \chi_{n-1} \nonumber \\ | F \chi_n = \left( \lambda - \lambda_q \right) O \chi_{n-1} \nonumber \\ | ||

\end{equation} | \end{equation} | ||

| − | + | where <math>F = H-\lambda_qO</math>. The factor of <math>\left( \lambda - \lambda_q \right)</math> can be | |

| − | where | + | dropped because this only affects the normalization of <math>\chi_n</math>. To find <math>\chi_n</math>, solve |

| − | dropped because this only affects the normalization of | ||

| − | |||

\begin{equation} | \begin{equation} | ||

F \chi_n = O \chi_{n-1} \nonumber | F \chi_n = O \chi_{n-1} \nonumber | ||

\end{equation} | \end{equation} | ||

| − | |||

(N equations in N unknowns). Then | (N equations in N unknowns). Then | ||

| − | |||

\begin{equation} | \begin{equation} | ||

\lambda = \frac{ \langle \chi_n | H | \chi_n \rangle}{\langle \chi_n | \chi_n \rangle} \nonumber | \lambda = \frac{ \langle \chi_n | H | \chi_n \rangle}{\langle \chi_n | \chi_n \rangle} \nonumber | ||

| Line 510: | Line 504: | ||

[[File:Pic3.jpg|400px]] | [[File:Pic3.jpg|400px]] | ||

| − | defines the complete set of 6 independent variables is | + | defines the complete set of 6 independent variables is <math>r_1</math>, <math>r_2</math>, <math>r_{12}</math>, <math>\theta_1</math>, <math>\varphi_1</math>, <math>\chi</math>. |

| − | If | + | If <math>r_{12}</math> were not an independent variable, then one could take the volume element to be |

\begin{equation} | \begin{equation} | ||

| Line 518: | Line 512: | ||

\end{equation} | \end{equation} | ||

| − | However, | + | However, <math>\theta_2</math> and <math>\varphi_2</math> are no longer independent variables. To eliminate them, take the point <math>{\bf r}_1</math> as the |

origin of a new polar co-ordinate system, and write | origin of a new polar co-ordinate system, and write | ||

| Line 531: | Line 525: | ||

\end{equation} | \end{equation} | ||

| − | Then for fixed | + | Then for fixed <math>r_1</math> and <math>r_{12}</math>, |

\begin{equation} | \begin{equation} | ||

| Line 550: | Line 544: | ||

\end{align} | \end{align} | ||

| − | Consider first the angular integral. | + | Consider first the angular integral. <math>Y^{m_2}_{l_2} \left( \theta_2, \varphi_2 \right)</math> can be expressed in terms of the independent variables <math>\theta_1, \varphi_1, \chi</math> by use of the rotation matrix relation |

| − | |||

\begin{equation} | \begin{equation} | ||

Y^{m_2}_{l_2}( \theta_2, \varphi_2) = \sum_m \mathcal{D}^{(l_2)}_{m_2,m}(\varphi_1,\theta_1,\chi)^*Y^m_{l_2}( \theta_{12}, \varphi) | Y^{m_2}_{l_2}( \theta_2, \varphi_2) = \sum_m \mathcal{D}^{(l_2)}_{m_2,m}(\varphi_1,\theta_1,\chi)^*Y^m_{l_2}( \theta_{12}, \varphi) | ||

\end{equation} | \end{equation} | ||

| − | + | where <math>\theta, \varphi</math> are the polar angles of <math>{\bf r}_2</math> relative to <math>{\bf r}_1</math>. The angular integral is then | |

| − | where | ||

\begin{align} | \begin{align} | ||

| Line 589: | Line 581: | ||

\end{equation} | \end{equation} | ||

| − | Note that | + | Note that <math>P_{l_2} \left( \cos \theta \right)</math> is just a short hand expression for a radial function because |

\begin{equation} | \begin{equation} | ||

| Line 609: | Line 601: | ||

is a purely radial function. | is a purely radial function. | ||

| − | The above would become quite complicated for large | + | The above would become quite complicated for large <math>l_2</math> because <math>P_{l_2}(\cos \theta_{12})</math> contains terms up to <math>\left( \cos \theta_{12} \right)^{l_2}</math>. However, recursion relations exist which allow any integral containing <math>P_l \left( \cos \theta_{12} \right)</math> in terms of those containing just <math>P_0 \left( \cos \theta_{12} \right) = 1</math> and <math>P_1 \left( \cos \theta_{12} \right) = \cos \theta_{12}</math>. |

==Radial Integrals and Recursion Relations== | ==Radial Integrals and Recursion Relations== | ||

| − | The basic radial integral is | + | The basic radial integral is [see G.W.F. Drake, Phys. Rev. A 18, 820 (1978)] |

\begin{align} | \begin{align} | ||

| Line 633: | Line 625: | ||

\end{array} | \end{array} | ||

| − | The above applies for | + | The above applies for <math> a, b \geq -2, c\geq -1</math>. |

| − | + | <math>[x]</math> means "greatest integer in" <math>x</math>. | |

Then | Then | ||

| Line 669: | Line 661: | ||

\end{equation} | \end{equation} | ||

| − | Here | + | Here <math>x=\cos\theta_{12}</math> and |

\begin{align} | \begin{align} | ||

| Line 682: | Line 674: | ||

\end{equation} | \end{equation} | ||

| − | The | + | The <math>r_{12}</math> part of the integral is |

\begin{align} | \begin{align} | ||

| Line 694: | Line 686: | ||

\cos \theta_{12} = \frac{r_{1}^{2} + r_{2}^{2} - r_{12}^{2}}{2 r_{1} r_{2}} | \cos \theta_{12} = \frac{r_{1}^{2} + r_{2}^{2} - r_{12}^{2}}{2 r_{1} r_{2}} | ||

\begin{array}{lll} | \begin{array}{lll} | ||

| − | & = -1 & when & r_{12}^{2} = \left( r_{1} + r_{2} \right)^{2} \\ | + | & = -1 & {\rm when} & r_{12}^{2} = \left( r_{1} + r_{2} \right)^{2} \\ |

| − | & = 1 & when & r_{12}^{2} = \left( r_{1} - r_{2} \right)^{2} | + | & = 1 & {\rm when} & r_{12}^{2} = \left( r_{1} - r_{2} \right)^{2} |

\end{array} | \end{array} | ||

\end{equation} | \end{equation} | ||

| − | and | + | and <math>P_l(1)=1</math>, <math>P_{l}(-1)=(-1)^{l}</math>. |

Thus, | Thus, | ||

| Line 713: | Line 705: | ||

\end{equation} | \end{equation} | ||

| − | then | + | then [G.W.F. Drake, Phys. Rev. A 18, 820 (1978)] |

\begin{equation} | \begin{equation} | ||

| Line 720: | Line 712: | ||

\end{equation} | \end{equation} | ||

| − | For the case | + | For the case <math>c=-2</math>, take |

\begin{equation} | \begin{equation} | ||

| Line 732: | Line 724: | ||

\end{equation} | \end{equation} | ||

| − | This allows all | + | This allows all <math>I_{l}</math> integrals to be calculated from tables of <math>I_0</math> and |

| − | + | <math>I_1</math> integrals. | |

===The General Integral=== | ===The General Integral=== | ||

| Line 784: | Line 776: | ||

\end{equation} | \end{equation} | ||

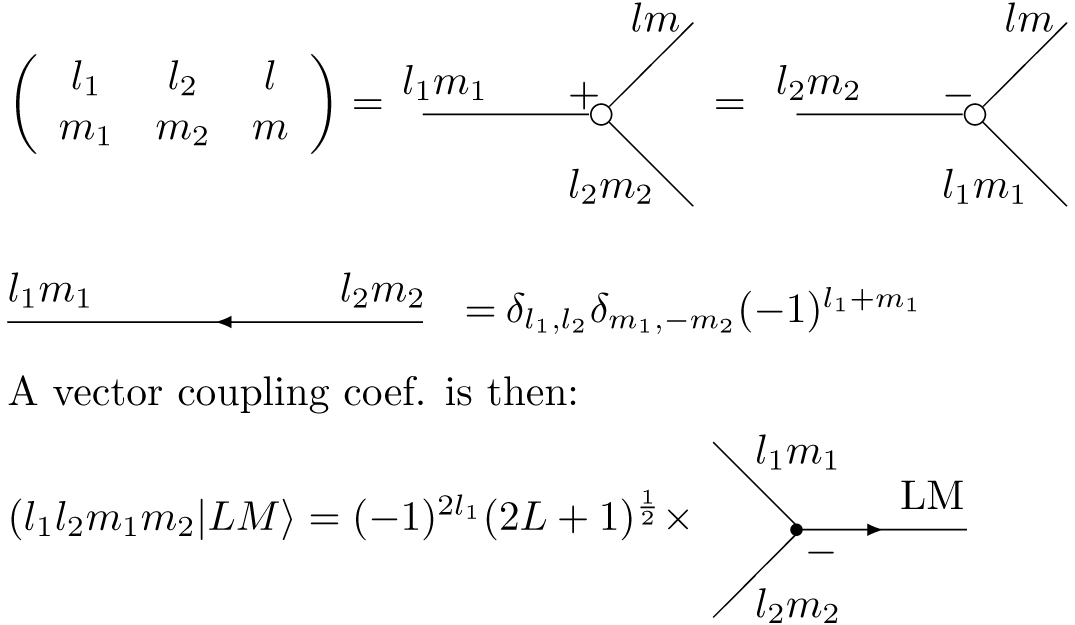

| − | is a 3- | + | is a 3-<math>j</math> symbol. In particular, write |

\begin{array}{cc} | \begin{array}{cc} | ||

| Line 794: | Line 786: | ||

\end{array} | \end{array} | ||

| − | The angular integral then gives a factor of | + | The angular integral then gives a factor of <math>2 \pi \delta_{\Lambda, \Lambda^{\prime}} \delta_{M, M^{\prime}} P_{\Lambda} \left( \cos \theta_{12} \right)</math>. The total integral therefore reduces to the form |

\begin{equation} | \begin{equation} | ||

| Line 800: | Line 792: | ||

\end{equation} | \end{equation} | ||

| − | where | + | where <math>C_{\Lambda} = \sum_{\lambda_{1}, \lambda_{2}} C_{ \lambda_{1}, \lambda_{2}, \Lambda}</math>. For further details and derivations, including graphical representations, see G.W.F. Drake, Phys. Rev. A <math>{\bf 18}</math>, 820 (1978). |

==Graphical Representation== | ==Graphical Representation== | ||

| Line 819: | Line 811: | ||

\end{equation} | \end{equation} | ||

| − | where | + | where <math>r \equiv r_{12}</math> and <math>\cos\theta \equiv \cos\theta_{12}</math>. Also in what follows, define <math>\hat{\nabla}^Y_1 \equiv r_1\nabla^Y_1</math> where <math>\nabla^Y</math> operates only on the spherical harmonic part of the wave function, and similarly for <math>\vec{l}_1^Y</math>. |

A general matrix element is | A general matrix element is | ||

| Line 827: | Line 819: | ||

\end{equation} | \end{equation} | ||

| − | Since | + | Since <math>\nabla^2_1</math> is rotationally invariant, this vanishes unless <math>L=L^\prime</math>, <math>M=M^\prime</math>. Also <math>\nabla_1^2</math> is Hermitian, so that the result must be the same whether it operates to the right or left, even though the results look very different. In fact, requiring the results to be the same yields some interesting and useful integral identities as follows: |

Put | Put | ||

| Line 844: | Line 836: | ||

\begin{equation} | \begin{equation} | ||

| − | \nabla^2_1 \mathcal{F} = \left\{ \frac{1}{r_1^2} \left[ a \left( a + 1 \right) - l_1 \left( l_1 + 1 \right) \right] + \frac{c \left( c + 1 \right)}{r^2} + \alpha^2\frac{2\alpha \left( a + 1 \right)}{r_1} + \frac{2 \left(r_1 - r_2 \cos \theta \right) }{r_1r^2}c \left | + | \nabla^2_1 \mathcal{F} = \left\{ \frac{1}{r_1^2} \left[ a \left( a + 1 \right) - l_1 \left( l_1 + 1 \right) \right] + \frac{c \left( c + 1 \right)}{r^2} + \alpha^2 + \frac{2\alpha \left( a + 1 \right)}{r_1} + \frac{2 \left(r_1 - r_2 \cos \theta \right) }{r_1r^2}c \left( a - \alpha r_1 \right) - \frac{2c}{r^2} \left( \hat{\nabla}^Y_1 \cdot \hat{r}_2 \right) \frac{r_2}{r_1} \right\} \mathcal{F} |

\end{equation} | \end{equation} | ||

| Line 860: | Line 852: | ||

\end{equation} | \end{equation} | ||

| − | For brevity, let the sum over | + | For brevity, let the sum over <math>\Lambda</math> and the radial integrations be understood, and let <math>\left( \nabla_1^2 \right)</math> stand for the terms which appear in the integrand. Then operating to the right gives |

\begin{equation} | \begin{equation} | ||

| Line 881: | Line 873: | ||

\end{array} | \end{array} | ||

| − | etc., and substitute | + | etc., and substitute <math>a^\prime = a_+ - a</math>, <math>c^\prime = c_+ - c</math>, and <math>\alpha^\prime = \alpha_+ - \alpha</math> in <math>(\nabla^2_1)_L</math>. If <math>a_+</math>, <math>c_+</math> and <math>\alpha_+</math> are held fixed, then the equation |

\begin{equation} | \begin{equation} | ||

| Line 887: | Line 879: | ||

\end{equation} | \end{equation} | ||

| − | must be true for arbitrary | + | must be true for arbitrary <math>a</math>, <math>c</math>, and <math>\alpha</math>. Their coefficients must thus vanish. |

This yields the integral relations | This yields the integral relations | ||

| Line 896: | Line 888: | ||

\end{equation} | \end{equation} | ||

| − | from the coefficient of | + | from the coefficient of <math>a</math> and |

\begin{equation} | \begin{equation} | ||

| Line 903: | Line 895: | ||

\end{equation} | \end{equation} | ||

| − | from the coefficient of | + | from the coefficient of <math>c</math>. The coefficient of <math>\alpha</math> gives an equation equivalent to (I). |

Furthermore, if can be show that (see problem) | Furthermore, if can be show that (see problem) | ||

| Line 912: | Line 904: | ||

\end{align} | \end{align} | ||

| − | and similarly for | + | and similarly for <math>\left( \hat{r}_2 \cdot \hat{\nabla}^{Y\prime}_1 \right)</math> with <math>l_1</math> and <math>l_1^\prime</math> interchangeable, then it follows that |

\begin{equation} | \begin{equation} | ||

| Line 924: | Line 916: | ||

\end{equation} | \end{equation} | ||

| − | where equality applies after integration and summation over | + | where equality applies after integration and summation over <math>\Lambda</math>. |

| − | Thus | + | Thus (II) becomes |

\begin{equation}\label{IIa} | \begin{equation}\label{IIa} | ||

| Line 941: | Line 933: | ||

\end{align} | \end{align} | ||

| − | where | + | where the coefficients <math>C_\Lambda \left( 1 \right)</math> are the angular coefficients from the overlap integral |

\begin{align} | \begin{align} | ||

| − | \int & d\Omega \mathcal{Y}^{M*}_{l_1^\prime l_2^\prime L}(\hat{r}_1,\hat{r}_2) \mathcal{Y}^{M}_{l_1 l_2 L}(\hat{r}_1,\hat{r}_2)\\ | + | \int & d\Omega\, \mathcal{Y}^{M*}_{l_1^\prime l_2^\prime L}(\hat{r}_1,\hat{r}_2)\, \mathcal{Y}^{M}_{l_1 l_2 L}(\hat{r}_1,\hat{r}_2)\\ |

= & \sum_\Lambda C_\Lambda(1)P_\Lambda(\cos\theta_{12}). | = & \sum_\Lambda C_\Lambda(1)P_\Lambda(\cos\theta_{12}). | ||

\end{align} | \end{align} | ||

| Line 957: | Line 949: | ||

It is also useful to use | It is also useful to use | ||

<math>\begin{eqnarray}\ \ | <math>\begin{eqnarray}\ \ | ||

| − | (\cos^2\theta-1)P_L(\cos\theta) = | + | \cos\theta\, P_L(\cos\theta) &=& \frac{1}{2L+1}[L\,P_{L-1}(\cos\theta) + (L+1)P_{L+1}(\cos\theta)] |

| + | \\ | ||

| + | (\cos^2\theta-1)P_L(\cos\theta) &=& | ||

\frac{L(L-1)}{(2L-1)(2L+1)}P_{L-2}(\cos\theta)\nonumber | \frac{L(L-1)}{(2L-1)(2L+1)}P_{L-2}(\cos\theta)\nonumber | ||

| − | -\frac{2(L^2+L-1)}{(2L-1)(2L+3)}P_L(\cos\theta) + \frac{(L+1)(L+2)}{(2L+1)(2L+3)}P_{L+2}(\cos\theta)\nonumber | + | -\frac{2(L^2+L-1)}{(2L-1)(2L+3)}P_L(\cos\theta) + \frac{(L+1)(L+2)}{(2L+1)(2L+3)}P_{L+2}(\cos\theta)\nonumber\\ |

| + | &=& \frac{L(L-1)}{(2L-1)(2L+1)}[P_{L-2}(\cos\theta) - P_L(\cos\theta)] | ||

| + | + \frac{(L+1)(L+2)}{(2L+1)(2L+3)}[P_{L+2}(\cos\theta) - P_L(\cos\theta)] | ||

\end{eqnarray}</math> | \end{eqnarray}</math> | ||

| − | together with the integral recursion relation | + | together with a double application of the integral recursion relation |

<math> | <math> | ||

I_{L+1}\left(\frac{1}{r}\frac{d}{dr}q(r)\right) = | I_{L+1}\left(\frac{1}{r}\frac{d}{dr}q(r)\right) = | ||

| Line 968: | Line 964: | ||

</math> | </math> | ||

| − | Of course <math>l_1^2\mathcal{Y}^M_{l_1l_2L}(\hat{r}_1,\hat{r}_2)=l_1(l_1+1)\mathcal{Y}^M_{l_1l_2L}(\hat{r}_1,\hat{r}_2)</math>. | + | Of course <math>l_1^2\mathcal{Y}^M_{l_1l_2L}(\hat{r}_1,\hat{r}_2)=l_1(l_1+1)\mathcal{Y}^M_{l_1l_2L}(\hat{r}_1,\hat{r}_2)</math>. Begin by expanding |

| + | <math> | ||

| + | l_1^2(g\mathcal{Y}) = \mathcal{Y}l_1^2g + g\,l_1^2\mathcal{Y} + 2(\vec{l}_1g)\cdot(\vec{l}_1\mathcal{Y}) | ||

| + | </math>, and show that | ||

| + | |||

| + | <math> | ||

| + | \begin{eqnarray}\ \ | ||

| + | (\vec{l}_1g)\cdot(\vec{l}_1\mathcal{Y}) & = & \frac{r_1^2}{r}\frac{dg}{dr}\vec{r}_2\cdot \nabla_1\mathcal{Y}\,\,\,\,\mbox{ and}\\ | ||

| + | l_1^2g(r) &=& 2r_1r_2\cos\theta\frac{1}{r}\frac{dg}{dr} + r_1^2r_2^2(\cos^2\theta - 1) | ||

| + | \frac{1}{r}\frac{d}{dr}\left(\frac{1}{r}\frac{dg}{dr}\right). | ||

| + | \end{eqnarray} | ||

| + | </math> | ||

| + | |||

| + | The proof amounts to showing that the term <math>l_1^2g(r)</math> can be replaced by | ||

| + | <math>\Lambda(\Lambda+1)g(r)</math> after multiplying by <math>P_\Lambda(\cos\theta)</math> | ||

| + | and integrating by parts with respect to the radial integrations over <math>r_1</math>, <math>r_2</math> and | ||

| + | <math>r \equiv |\vec{r}_1 - \vec{r}_2|</math>. Remember that <math>\cos\theta</math> is just a short-hand notation for the radial function <math>(r_1^2 + r_2^2 - r^2)/(2r_1r_2)</math>. | ||

==General Hermitian Property== | ==General Hermitian Property== | ||

Each combination of terms of the form | Each combination of terms of the form | ||

| − | + | \begin{equation} | |

| − | <f>_R = a^2f_1 af_2+abf_3+b^2f_4+bf_5+af_6\nabla_1^ | + | <f>_R = a^2f_1+af_2+abf_3+b^2f_4+bf_5+af_6\nabla_1^Y+bf_7\nabla^Y_2+f_8(Y) |

| − | + | \end{equation} | |

can be rewritten | can be rewritten | ||

| − | + | \begin{equation} | |

| − | <f>_L= (a_+-a)^2f_1+(a_+-a)f_2 + (a_+-a)(b_+-b)f_3+(b_+-b)^2f_4 | + | <f>_L= (a_+-a)^2f_1+(a_+-a)f_2 + (a_+-a)(b_+-b)f_3+(b_+-b)^2f_4 |

| − | +(b_+-b)f_5+(a_+-a)f_6\nabla^{ | + | +(b_+-b)f_5+(a_+-a)f_6\nabla^{Y\prime}_1+(b_+-b)f_7\nabla^{Y\prime}_2+f_8)Y^\prime |

| − | \end{ | + | \end{equation} |

| − | + | where as usual <math>\nabla_1^Y</math> acts only on the spherical harmonic part of the wave function denoted for short by <math>Y</math>. | |

Since these must be equal for arbitrary <math>a</math> and <math>b</math>, | Since these must be equal for arbitrary <math>a</math> and <math>b</math>, | ||

| − | + | \begin{equation} | |

| − | a_+^2f_1+a_+f_2+_+b_+f_3+b_+f_4+b_+f_5+a_+f_6\nabla^{ | + | a_+^2f_1+a_+f_2+_+b_+f_3+b_+f_4+b_+f_5+a_+f_6\nabla^{Y\prime}_1 + |

| − | b_+f_7\nabla^{ | + | b_+f_7\nabla^{Y\prime}_2+f_8(Y^\prime)-f_8(Y)=0 |

| − | + | \end{equation} | |

| − | Adding the corresponding expression with <math> | + | Adding the corresponding expression with <math>Y</math> and <math>Y^\prime</math> interchanged |

yields | yields | ||

<math>\label{III} | <math>\label{III} | ||

| Line 995: | Line 1,007: | ||

Subtracting gives | Subtracting gives | ||

| − | + | \begin{eqnarray*} | |

| − | f_8( | + | f_8(Y)-f_8(Y^\prime)&=&-\frac{1}{2}[a_+f_6\nabla^-_1+b_+f_7\nabla_2^-]\tag{IV}\\ |

| − | + | a[-2a_+f_1-2f_2-b_+f_3-f_6\nabla^+_1]&=&0\tag{V}\\ | |

| − | + | b[-2b_+f_4-2f_5-a_+f_3-f_7\nabla^+_2]&=&0\tag{VI}\\ | |

| − | a[-2a_+f_1-2f_2-b_+f_3-f_6 | + | \end{eqnarray*} |

| − | |||

| − | |||

| − | b[-2b_+f_4-2f_5-a_+f_3-f_7\nabla^+_2]=0\tag{VI} | ||

| − | |||

Adding the two forms gives | Adding the two forms gives | ||

| Line 1,010: | Line 1,018: | ||

<f>_R+<f>_L=\frac{1}{2}(a_+^2+a_-^2)f_1+a_+f_2+\frac{1}{2}(a_+b_++a_-b_-)f_3\nonumber\\ | <f>_R+<f>_L=\frac{1}{2}(a_+^2+a_-^2)f_1+a_+f_2+\frac{1}{2}(a_+b_++a_-b_-)f_3\nonumber\\ | ||

\ +\frac{1}{2}(b_+^2+b_-^2)f_4+b_+f_5+\frac{1}{2}f_6(a_+\nabla_1^++a_-\nabla^-_1)\nonumber\\ | \ +\frac{1}{2}(b_+^2+b_-^2)f_4+b_+f_5+\frac{1}{2}f_6(a_+\nabla_1^++a_-\nabla^-_1)\nonumber\\ | ||

| − | \ +\frac{1}{2}(b_+\nabla_2^++b_-\nabla^-_2)+f_8( | + | \ +\frac{1}{2}(b_+\nabla_2^++b_-\nabla^-_2)+f_8(Y)+f_8(Y^\prime)\nonumber |

\end{eqnarray} | \end{eqnarray} | ||

| − | Subtracting <math> | + | Subtracting <math>\frac{x}{2}\times({\rm I})</math> gives |

\begin{eqnarray}\ \ | \begin{eqnarray}\ \ | ||

| Line 1,019: | Line 1,027: | ||

(1-\frac{x}{2})a_+f_2+\frac{1}{2}[(1-x)a_+b_++a_-b_-]f_3\nonumber\\ | (1-\frac{x}{2})a_+f_2+\frac{1}{2}[(1-x)a_+b_++a_-b_-]f_3\nonumber\\ | ||

+\frac{1}{2}[(1-x)b_+^2+b_-^2]f_4+(1-\frac{x}{2})f_5b_++\frac{1}{2}f_6[(1-\frac{x}{2})a_+\nabla_1^++a_-\nabla^-_1]\nonumber\\ | +\frac{1}{2}[(1-x)b_+^2+b_-^2]f_4+(1-\frac{x}{2})f_5b_++\frac{1}{2}f_6[(1-\frac{x}{2})a_+\nabla_1^++a_-\nabla^-_1]\nonumber\\ | ||

| − | +\frac{1}{2}[(1-\frac{x}{2})b_+\nabla^+_2+b_-\nabla^-_2]+f_8( | + | +\frac{1}{2}[(1-\frac{x}{2})b_+\nabla^+_2+b_-\nabla^-_2]+f_8(Y)+f_8(Y^\prime)\nonumber |

\end{eqnarray} | \end{eqnarray} | ||

| Line 1,026: | Line 1,034: | ||

<f>_R+<f>_L &=& | <f>_R+<f>_L &=& | ||

\frac{1}{2}[a_-^2f_1+a_+f_2+a_-b_-f_3+b_-^2f_4+b_+f_5\nonumber\\ | \frac{1}{2}[a_-^2f_1+a_+f_2+a_-b_-f_3+b_-^2f_4+b_+f_5\nonumber\\ | ||

| − | &+&\left(\frac{1}{2}a_+\nabla^+_1+a_-\nabla^-_1\right)f_6+\left(\frac{1}{2}b_+\nabla^+_2+b_-\nabla^-_2\right)f_7]+f_8( | + | &+&\left(\frac{1}{2}a_+\nabla^+_1+a_-\nabla^-_1\right)f_6+\left(\frac{1}{2}b_+\nabla^+_2+b_-\nabla^-_2\right)f_7]+f_8(Y)+f_8(Y^\prime)\nonumber |

\end{eqnarray} | \end{eqnarray} | ||

| Line 1,081: | Line 1,089: | ||

<math> | <math> | ||

B_1 = | B_1 = | ||

| − | 2\left[(1-x)\alpha_+(a_++2)-\alpha_-a_--2(1-\frac{x}{2})\alpha_++\frac{c_-}{c_+}[a_-\alpha_++\alpha_(a_++2)]\right] | + | 2\left[(1-x)\alpha_+(a_++2)-\alpha_-a_--2(1-\frac{x}{2})\alpha_++\frac{c_-}{c_+}[a_-\alpha_++\alpha_-(a_++2)]\right] |

</math> | </math> | ||

| Line 1,089: | Line 1,097: | ||

<math> | <math> | ||

| − | D_1 = - | + | D_1 = -(1-x)\alpha_+^2 +\alpha_-^2 - 2\frac{c_-}{c_+}\alpha_-\alpha_+ |

</math> | </math> | ||

| Line 1,223: | Line 1,231: | ||

<math>\begin{eqnarray}\\ | <math>\begin{eqnarray}\\ | ||

| − | E(1s8d^1D)= -2. | + | E(1s8d^1D)&=& -2.007\,816\,512\,563\,81 {\rm\ a.u.}\nonumber\\ |

| − | E(1s8d^3D)= - 2. | + | E(1s8d^3D)&=& - 2.007\,817\,934\,711\,71 {\rm\ a.u.}\nonumber\\ |

| − | E_{SH} = -2. | + | E_{SH} &=& -2.007\,812\,5 {\rm\ a.u.} \nonumber |

\end{eqnarray}</math> | \end{eqnarray}</math> | ||

| Line 1,233: | Line 1,241: | ||

\Psi = c_0\Psi_0 + | \Psi = c_0\Psi_0 + | ||

\sum_{ijk}\left[c_{ijk}^{(1)}\varphi_{ijk}(\alpha_1,\beta_1)+c_{ijk}^{(2)}\varphi(\alpha_2,\beta_2)\right]\pm | \sum_{ijk}\left[c_{ijk}^{(1)}\varphi_{ijk}(\alpha_1,\beta_1)+c_{ijk}^{(2)}\varphi(\alpha_2,\beta_2)\right]\pm | ||

| − | exchange | + | {\rm exchange} |

</math> | </math> | ||

| Line 1,278: | Line 1,286: | ||

<math>i=0</math> term is the dominant contribution to <math>f(p,q;\beta)</math>. However, since | <math>i=0</math> term is the dominant contribution to <math>f(p,q;\beta)</math>. However, since | ||

this term depends only on the sum of powers <math>b+c</math> for <math>r_2</math>, it cancels exactly | this term depends only on the sum of powers <math>b+c</math> for <math>r_2</math>, it cancels exactly | ||

| − | from the matrix element of <math> | + | from the matrix element of |

| − | be omitted in the calculations of integrands, | + | <math> |

| + | \frac{1}{r_{12}} - \frac{1}{r_2} | ||

| + | </math> | ||

| + | and can therefore | ||

| + | be omitted in the calculations of integrands, thereby saving many significant | ||

figures. This is especially valuable when <math>b+c</math> is large and <math>a</math> is small. | figures. This is especially valuable when <math>b+c</math> is large and <math>a</math> is small. | ||

| Line 1,321: | Line 1,333: | ||

\end{eqnarray}</math> | \end{eqnarray}</math> | ||

| − | If <math>n=2</math>, the | + | If <math>n=2</math>, the Schrödinger equation is |

<math>\begin{eqnarray}\ \ \ | <math>\begin{eqnarray}\ \ \ | ||

\left[ -\frac{\hbar^2}{2\mu}(\nabla^2_{s_1}+ \nabla^2_{s_2}) - | \left[ -\frac{\hbar^2}{2\mu}(\nabla^2_{s_1}+ \nabla^2_{s_2}) - | ||

| − | \frac{\hbar^2}{M}\nabla_{s_1}\nabla_{s_2}-\frac{Ze^2}{s_1}-\frac{Ze^2}{s_2}+\frac{e^2}{ | + | \frac{\hbar^2}{M}\nabla_{s_1}\nabla_{s_2}-\frac{Ze^2}{s_1}-\frac{Ze^2}{s_2}+\frac{e^2}{s_{12}}\right]\psi |

= E\psi | = E\psi | ||

\end{eqnarray}</math>. | \end{eqnarray}</math>. | ||

| Line 1,330: | Line 1,342: | ||

Define the reduced mass Bohr radius | Define the reduced mass Bohr radius | ||

<math>\begin{eqnarray} | <math>\begin{eqnarray} | ||

| − | a_\mu = \frac{hbar^2}{\mu e^2} = \frac{m}{\mu}a_0 | + | a_\mu = \frac{\hbar^2}{\mu e^2} = \frac{m}{\mu}a_0 |

\end{eqnarray}</math> | \end{eqnarray}</math> | ||

and | and | ||

| Line 1,339: | Line 1,351: | ||

\end{eqnarray}</math> | \end{eqnarray}</math> | ||

| − | The | + | The Schrödinger equation then becomes |

<math>\begin{eqnarray}\ \ \ | <math>\begin{eqnarray}\ \ \ | ||

\left[ | \left[ | ||

| Line 1,345: | Line 1,357: | ||

\end{eqnarray}</math> | \end{eqnarray}</math> | ||

| − | The effects of finite | + | The effects of finite mass are thus |

| − | 1. A reduced mass correction to all energy levels of <math>(e^2/ | + | 1. A reduced mass correction to all energy levels of <math>(e^2/a_\mu)/(e^2/a_0) |

| − | = \mu/m</math>, | + | = \mu/m</math>, i.e. |

<math>\begin{eqnarray} | <math>\begin{eqnarray} | ||

E_M=\frac{\mu}{m}E_\infty | E_M=\frac{\mu}{m}E_\infty | ||

| Line 1,369: | Line 1,381: | ||

<math>{\bf\rho}_1 \cdot {\bf\rho}_2</math>, the operator is like the product of two | <math>{\bf\rho}_1 \cdot {\bf\rho}_2</math>, the operator is like the product of two | ||

dipole operators. For product type wave functions of the form | dipole operators. For product type wave functions of the form | ||

| − | + | $ | |

| − | \psi = \psi(1s)\psi(nl) \pm exchange | + | \psi = \psi(1s)\psi(nl) \pm \mbox{exchange}, |

| − | </math> | + | $ |

| − | the matrix element | + | the matrix element vanishes for all but P-states. |

| + | |||

| + | =Radiative Transitions= | ||

| + | |||

| + | ==Transition Integrals and the Line Strength== | ||

| + | |||

| + | For an electric dipole transition <math>\gamma'\,L'\,S'\,J'\,M' \rightarrow \gamma\,L\,S\,J\,M</math>, the line strength is defined by | ||

| + | \begin{equation} | ||

| + | S = \sum_{M',\mu,M}|\langle\gamma'\,L'\,S'\,J'\,M'|r_\mu|\gamma\,L\,S\,J\,M\rangle|^2 | ||

| + | \end{equation} | ||

| + | Using the Wigner-Eckart theorem, this is related to the reduced matrix element by | ||

| + | \begin{eqnarray} | ||

| + | S(J',J) = |\langle\gamma'\,L'\,S'\,J'||r||\gamma\,L\,S\,J\,M\rangle|^2 | ||

| + | \sum_{M',\mu,M}\left|\left(\matrix{J' & 1 & J \cr | ||

| + | -M'& \mu &M \cr}\right)\right|^2 | ||

| + | \end{eqnarray} | ||

| + | Using the sum rule for the 3-<math>j</math> symbols | ||

| + | \begin{equation} | ||

| + | \sum_{M',\mu,M}\left|\left(\matrix{J' & 1 & J \cr | ||

| + | -M'& \mu &M \cr}\right)\right|^2 = 1 | ||

| + | \end{equation} | ||

| + | we see that the line strength is identical to the reduced matrix element | ||

| + | \begin{equation} | ||

| + | S(J',J) = |\langle\gamma'\,L'\,S'\,J'||r||\gamma\,L\,S\,J\,M\rangle|^2 | ||

| + | \end{equation} | ||

| + | For a nonrelativistic calculation in <math>LS</math> coupling, <math>S = S'</math> and we can next strip out the dependence on <math>J</math> and <math>J'</math> by use of Eq.\ (7.1.7) of Edmonds {<i> Angular Momentum in Quantum Mechanics</i>} with <math>k = 1</math> to obtain | ||

| + | \begin{equation} | ||

| + | S(J',J) = (-1)^{L'+S+J+1}[(2J+1)(2J'+1)]^{1/2}\left\{ | ||

| + | \matrix{L' & J'& S\cr | ||

| + | J & L & 1\cr}\right\}\langle\gamma' L'|| r || \gamma L\rangle | ||

| + | \end{equation} | ||

| + | where <math>\langle\gamma' L'|| r || \gamma L\rangle</math> is the reduced matrix element if spin is neglected. Note that if spin is neglected, then the line-strength is simply | ||

| + | \begin{equation} | ||

| + | S(L',L) = |\langle\gamma' L'|| r || \gamma L\rangle|^2 | ||

| + | \end{equation} | ||

| + | To calculate the reduced matrix element, use a particular example along with the Wigner-Eckart theorem | ||

| + | such as | ||

| + | \begin{equation} | ||

| + | \langle\gamma'\, L'|| r || \gamma\, L\rangle = (-1)^{L'}\langle\gamma'\, L'\, 0| z | \gamma\, L\, 0\rangle{\Large /}\left(\matrix{L' & 1 & L \cr | ||

| + | 0 & 0 & 0 \cr}\right) | ||

| + | \end{equation} | ||

| + | |||

| + | ===Correlated Two-electron Integrals=== | ||

| + | For the case of two-electron integrals, <math>z = z_1 + z_2</math> and <math>r = r_1 + r_2</math>. As discussed in G.W.F. Drake, Phys. Rev. A <strong>18</strong>, 820 (1978), the general integral in Hylleraas coordinates for the integral over the <math>z_1</math> term can be written in the form | ||

| + | \begin{equation} | ||

| + | \label{int1} | ||

| + | \langle\gamma'\, L'\, 0| z_1 | \gamma\, L\, 0\rangle = \sum_\Lambda C_\Lambda I_\Lambda(\gamma',\gamma) | ||

| + | \end{equation} | ||

| + | where <math>I_\Lambda(\gamma',\gamma)</math> stands for the purely radial integral | ||

| + | \begin{equation} | ||

| + | \label{radial} | ||

| + | I_\Lambda(\gamma',\gamma) = \int_0^\infty r_1\,dr_1\int_0^\infty r_2\,dr_2\int_{|r_1-r_2|}^{r_1+r_2}r_{12}\, dr_{12} | ||

| + | R_{\gamma'}^*\,r_1\, R_\gamma P_\Lambda(\cos\theta_{12}) | ||

| + | \end{equation} | ||

| + | and <math>\cos\theta_{12} = (r_1^2 + r_2^2 - r_{12}^2)/(2r_1r_2)</math> is a short-hand notation for a radial function of <math>r_1</math>, <math>r_2</math> and <math>r_{12}</math>. Also, <math>P_\Lambda(\cos\theta_{12})</math> is a Legendre polynomial, | ||

| + | and, <math>R_\gamma = R_\gamma(r_1,r_2,r_{12})</math> is the radial part of the Hylleraas wave function for state <math>\gamma</math> such | ||

| + | that the total wave function is <math>\psi({\bf r}_1,{\bf r}_2) = R_\gamma(r_1,r_2,r_{12}){\cal Y}_{l_1,l_2,L}^M(\hat{\bf r}_1,\hat{\bf r}_2)\pm {\rm exchange}</math>, normalized to unity, and <math>{\cal Y}_{l_1,l_2,L}^M(\hat{\bf r}_1,\hat{\bf r}_2)</math> is a vector coupled product of spherical harmonics. The coefficents <math>C_\Lambda</math> in Eq.\ (\ref{int1}) can be calculated from Eq.\ (32) of the above 1978 paper. They need to be calculated separately for he direct-direct and the direct-exchange contributions. For the reduced matrix element of <math>z_1</math>, the leading 3-<math>j</math> symbol can be dropped, leaving (for the case <math>k = 1</math>) | ||

| + | \begin{eqnarray} | ||

| + | C_\Lambda^{\rm reduced} &=& \frac{(-1)^{l_2'+L'+L+1}}{2} (l_1,l_1',l_2,l_2',L,L')^{1/2} | ||

| + | \sum_{\lambda={\rm max}(l_1-1,0)}^{l_1+1} (\lambda,\Lambda,1) | ||

| + | \left(\matrix{l_1 & 1 & \lambda \cr | ||

| + | 0 & 0 & 0 \cr}\right) | ||

| + | \left(\matrix{l_1'& \lambda & \Lambda \cr | ||

| + | 0 & 0 & 0 \cr}\right)\nonumber\\ | ||

| + | &\times&\left(\matrix{l_2' & 1_2 & \Lambda \cr | ||

| + | 0 & 0 & 0 \cr}\right) | ||

| + | \left\{\matrix{L' & \lambda & l_2 \cr | ||

| + | \Lambda& l_2' & l_1' \cr}\right\} | ||

| + | \left\{\matrix{L' & \lambda & l_2 \cr | ||

| + | l_1 & L & 1 \cr}\right\} | ||

| + | \end{eqnarray} | ||

| + | where the notation <math>(a,b,c,\cdots)</math> means <math>(2a+1)(2b+1)(2c+1)\cdots</math>. | ||

| + | A similar expression can be obtained for the angular coefficients for the <math>z_2</math> term by interchanging the subscripts 1 and 2 throughout and multiplying by <math>(-1)^{L+L'+1}</math>. | ||

| + | |||

| + | ===Example=== | ||

| + | As an example, for the <math>1s^2\;^1S - 1s2p\;^1P</math> transition of helium, the only nonvanishing <math>C_\Lambda</math> angular coefficients are <math>C_1 = 1/2</math> for the direct-direct term (i.e. <math>P_1(\cos\theta_{12}) = \cos\theta_{12}</math> is included in the radial integral (\ref{radial})) and <math>C_0 = 1/2</math> for the direct-exchange term (i.e. <math>P_0(\cos\theta_{12}) = 1</math> is included in the radial integral (\ref{radial})). The results for infinite nuclear mass are | ||

| + | \begin{equation} | ||

| + | S(1\;^1S-2\;^1P) = (0.781\,127\,158\,667)^2 | ||

| + | \end{equation} | ||

| + | \begin{equation}f(1\;^1S-2\;^1P) = -0.276\,164\,703\,736 | ||

| + | \end{equation} | ||

| + | where | ||

| + | \begin{equation} | ||

| + | f(1\;^1S-2\;^1P) = \frac{2}{3}[E(1\;^1S) - E(2\;^1P)]S(1\;^1S-2\;^1P) | ||

| + | \end{equation} | ||

| + | is the absorption oscillator strength. | ||

Latest revision as of 00:51, 23 September 2024

Contents

- 1 Notes on solving the Schrödinger equation in Hylleraas coordinates for heliumlike atoms

Gordon W.F. Drake

Department of Physics, University of Windsor

Windsor, Ontario, Canada N9B 3P4

(Transcribed from hand-written notes and edited by Lauren Moffatt. Last revised November 22, 2017 to correct a missing subscript in the expression for $B_1$ in the Hamiltonian in Sect. 11 General Hermitian Property.)

- 1.1 Introduction

- 1.2 The Hartree Fock Method

- 1.3 Configuration Interaction

- 1.4 Hylleraas Coordinates

- 1.5 Completeness

- 1.6 Solutions of the Eigenvalue Problem

- 1.7 Matrix Elements of H

- 1.8 Radial Integrals and Recursion Relations

- 1.9 Graphical Representation

- 1.10 Matrix Elements of H

- 1.11 General Hermitian Property

- 1.12 Optimization of Nonlinear Parameters

- 1.13 The Screened Hydrogenic Term

- 1.14 Small Corrections

- 2 Radiative Transitions

Notes on solving the Schrödinger equation in Hylleraas coordinates

for heliumlike atoms

Gordon W.F. Drake

Department of Physics, University of Windsor

Windsor, Ontario, Canada N9B 3P4

(Transcribed from hand-written notes and edited by Lauren Moffatt. Last revised November 22, 2017 to correct a missing subscript in the expression for $B_1$ in the Hamiltonian in Sect. 11 General Hermitian Property.)

Introduction

These notes describe some of the technical details involved in solving the Schrödinger equation for a heliumlike atom of nuclear charge Z in correlated Hylleraas coordinates. The standard for computational purposes is to express all quantities in a form that is valid for electronic states of arbitrary angular momentum, and to express matrix elements of the Hamiltonian in a manifestly Hermitian symmetrized form. Further information and derivations of the mathematical techniques for angular integrals and radial recursion relations can be found in the paper G.W.F. Drake, Phys. Rev. A \({\bf 18}\), 820 (1978).

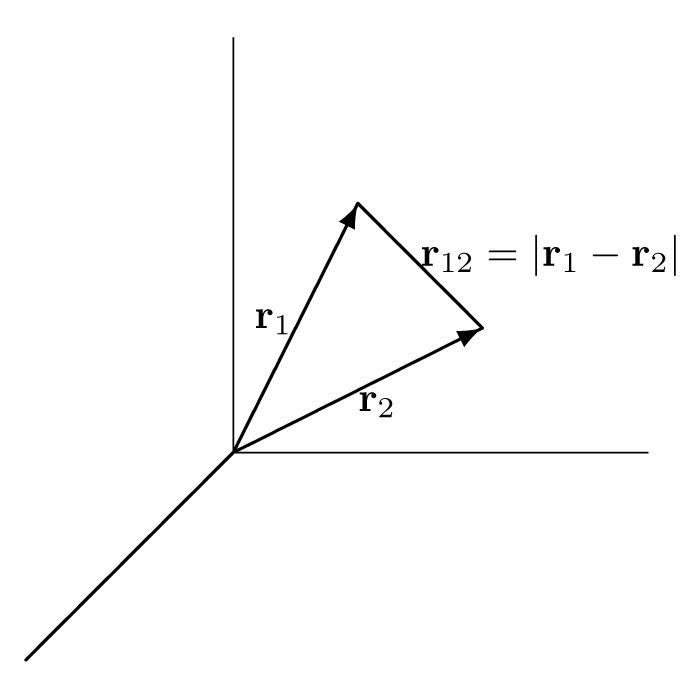

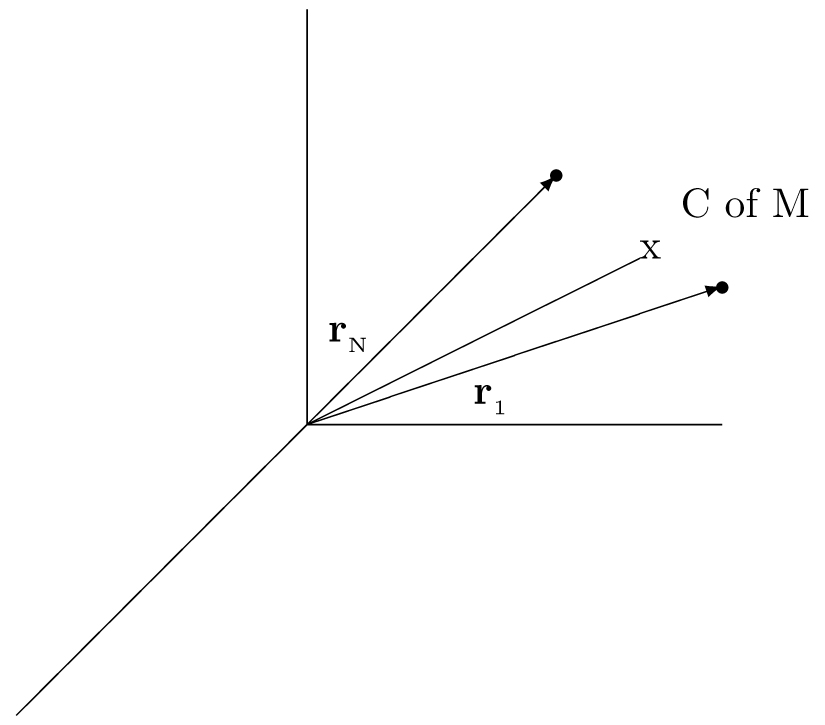

The starting point is the two-electron Schrödinger equation for infinite nuclear mass \begin{equation} \left[-\frac{\hbar^2}{2m}(\nabla^2_1 +\nabla^2_2) - \frac{Ze^2}{r_1} - \frac{Ze^2}{r_2}+\frac{e^2}{r_{12}} \right]\psi = E\psi\nonumber \end{equation} where \(m\) is the electron mass, and \(r_{12} = |{\bf r}_1 - {\bf r}_2|\) (see diagram below).

Begin by rescaling distances and energies so that the Schrödinger equation can be expressed in a dimensionless form. The dimensionless Z-scaled distance is defined by \(\begin{eqnarray} \rho = \frac{Zr}{a_0} \end{eqnarray} \) where \( \begin{eqnarray} a_0 = \frac{\hbar^2}{me^2} \end{eqnarray} \) is the atomic unit (a.u.) of distance equal to the Bohr radius \(0.529\, 177\, 210\, 92(17) \times 10^{-10}\) m. Then

\begin{equation}[-\frac{\hbar^2}{2m}Z^2(\frac{me^2}{\hbar^2})^2(\nabla^2_{\rho_1}+\nabla^2_{\rho_2}) - Z^2\frac{e^2}{a_0}\rho^{-1}_1 - Z^2\frac{e^2}{a_0}\rho^{-1}_2 + \frac{e^2}{a_0}Z\rho^{-1}_{12}]\psi= E\psi\nonumber\end{equation}

But \(\begin{eqnarray} \frac{\hbar^2}{m}(\frac{me^2}{\hbar^2})^2 = \frac{e^2}{a_0} \end{eqnarray} \) is the hartree atomic unit of of energy \((E_h = 27.211\,385\,05(60)\) eV, or equivalently \(E_h/(hc) = 2194.746\,313\,708(11)\) m\(^{-1})\). Therefore, after multiplying through by \(a_0/(Ze)^2\), the problem to be solved in Z-scaled dimensionless units becomes

\begin{equation} \left[-\frac{1}{2}(\nabla^2_{\rho_1}+\nabla^2_{\rho_2}) - \frac{1}{\rho_1} - \frac{1}{\rho_2} + \frac{Z^{-1}}{\rho_{12}}\right]\psi = \varepsilon\psi\nonumber \end{equation}

where \(\begin{eqnarray}\varepsilon = \frac{Ea_0}{(Ze)^2} \end{eqnarray}\) is the energy in Z-scaled atomic units. For convenience, rewrite this in the conventional form \begin{equation} H\psi = \varepsilon\psi\nonumber \end{equation} where (using \(r\) in place of \(\rho\) for the Z-scaled distance) \begin{equation} H = -\frac{1}{2}(\nabla^2_1+\nabla^2_2) - \frac{1}{r_1}-\frac{1}{r_2} + \frac{Z^{-1}}{r_{12}} \nonumber\end{equation} is the atomic Hamiltonian for infinite nuclear mass.

The Hartree Fock Method

For purposes of comparison, and to define the correlation energy, assume that \(\psi({\bf r}_1,{\bf r}_2)\) can be written in the separable product form \begin{equation} \psi({\bf r}_1,{\bf r}_2) = \frac{1}{\sqrt{2}} \left[ u_1(r_1) u_2(r_2) \pm u_2(r_1) u_1(r_2) \right] \nonumber \end{equation} for the \(1s^2\ ^1\!S\) ground state. Because of the \(\frac{1}{r_{12}}\) term in the Schrödinger equation, the exact solution connot be expressed in this form as a separable product. However, the Hartree-Fock approximation corresponds to finding the best possible solution to the Schrödinger equation \begin{equation} H\psi({\bf r}_1,{\bf r}_2) = E\psi({\bf r}_1,{\bf r}_2)\nonumber \end{equation} that can nevertheless be expressed in this separable product form, where as before \begin{equation} H = -\frac{1}{2}(\nabla^2_1+\nabla^2_2) - \frac{1}{r_1}- \frac{1}{r_2} + \frac{Z^{-1}}{r_{12}}\nonumber \end{equation} is the full two-electron Hamiltonian. To find the best solution, substitute into \(\left\langle\psi \lvert H-E \rvert \psi\right\rangle\) and require this expression to be stationary with respect to arbitrary infinitesimal variations \(\delta u_1\) and \(\delta u_2\) in \(u_1\) and \(u_2\) respectively; i.e.

\begin{equation} \frac{1}{2} \left\langle \delta u_1(r_1)u_2(r_2) \pm u_2(r_1)\delta u_1(r_2) \lvert H-E \rvert u_1(r_1) u_2(r_2)\pm u_2(r_1) u_1(r_2)\right\rangle\nonumber \end{equation}

\begin{equation} =\int\delta u_1(r_1) d{\bf r}_1 \left\{ \int d{\bf r}_2 u_2(r_2) \left( H - E \right) \left[ u_1(r_1)u_2(r_2) \pm u_2(r_1) u_1(r_2) \right] \right\}\nonumber \end{equation}

\begin{equation} = 0 \nonumber \end{equation}

for arbitrary \( \delta u_1(r_1).\nonumber \) Therefore \(\{\int d{\bf r}_2 \ldots \} = 0\).

Similarly, the coefficient of \(\delta u_2\) would give

\begin{equation} \int d{\bf r}_1 u_1(r_1) \left( H-E \right) \left[ u_1(r_1) u_2(r_2) \pm u_2(r_1) u_1(r_2) \right] = 0\nonumber \end{equation}

Define

\begin{equation} I_{12} = I_{21} = \int d{\bf r}\, u_1(r)u_2(r), \nonumber \end{equation}

\begin{equation} H_{ij} = \int d{\bf r}\, u_i(r)(-\frac{1}{2}\nabla - \frac{1}{r})u_j(r), \nonumber \end{equation}

\begin{equation} G_{ij}(r) = \int d{\bf r}^\prime u_i(r^\prime)\frac{1}{|{\bf r} - {\bf r}\prime|}u_j(r^\prime)\nonumber \end{equation}

Then the above equations become the pair of integro-differential equations

\begin{equation} [ H_0 - E + H_{22}+G_{22}(r)]u_1(r) = \mp [ I_{12}(H_0-E) + H_{12}+G_{12}(r)]u_2(r)\nonumber \end{equation}

\begin{equation} [H_0-E+H_{11}+G_{11}(r)]u_2(r) = \mp [I_{12}(H_0-E) + H_{12}+G_{12}(r)]u_1(r)\nonumber \end{equation}

These must be solved self-consistently for the "constants" \(I_{12}\) and \(H_{ij}\) and the function \(G_{ij}(r)\).

The Hartree Fock energy is \(E \simeq -2.87\ldots \)a.u. while the exact energy is \(E = -2.903724\ldots \)a.u.

The difference is called the "correlation energy" because it arises from the way in which the motion of one electron is correlated to the other. The Hartree Fock equations only describe how one electron moves in the average field provided by the other (mean-field theory).

Configuration Interaction

Expand \begin{equation} \psi({\bf r}_1,{\bf r}_2)= C_0u^{(s)}_1(r_1)u^{(s)}_1(r_2) + C_1u^{(P)}_1({\bf r}_1)u^{(P)}_1({\bf r}_2){\cal Y}^0_{1,1,0}(\hat{\bf r}_1, \hat{\bf r}_2)+C_2u^{(d)}_1({\bf r}_1)u^{(d)}_2({\bf r}_2){\cal Y}^0_{2,2,0}(\hat{\bf r}_1, \hat{\bf r}_2)+... \pm {\rm\ exchange} \end{equation} where \begin{equation} {\cal Y}^M_{l_1,l_2,L}(\hat{\bf r}_1, \hat{\bf r}_2)=\sum_{m_1,m_2}Y^{m_1}_{l_1}({\bf r}_1)Y^{m_2}_{l_2}({\bf r}_2)\times <l_1l_2m_1m_2\mid LM> \end{equation}

This works, but is slowly convergent, and very laborious. The best CI calculations are accurate to \( ~10^{-7}\) a.u.

Hylleraas Coordinates

[E.A. Hylleraas, Z. Phys. \({\bf 48}, 469(1928)\) and \({\bf 54}, 347(1929)\)] suggested using the co-ordinates \(r_1\), \(r_2\) and \(r_{12}\) or equivalently

\begin{eqnarray} s &=& r_1 + r_2, \nonumber\\ t &=& r_1-r_2, \nonumber\\ u &=& r_{12}\nonumber \end{eqnarray}

and writing the trial functions in the form

\begin{equation} \Psi({\bf r}_1,{\bf r}_2) = \sum^{i+j+k\leq N}_{i,j,k}c_{i,j,k}r_1^{i+l_1}r_2^{j+l_2}r_{12}^ke^{-\alpha r_1 - \beta r_2} \mathcal{Y}^M_{l_1,l_2,L}(\hat{r}_1,\hat{r}_2)\pm \text{exchange}\nonumber \end{equation}

Diagonalizing H in this non-orthogonal basis set is equivalent to solving \( \begin{eqnarray} \frac{\partial E}{\partial c_{i,j,k}} = 0\nonumber \end{eqnarray} \) for fixed \(\alpha\) and \(\beta\).

The diagonalization must be repeated for different values of \(\alpha\) and \(\beta\) in order to optimize the non-linear parameters.

Completeness

The completeness of the above basis set can be shown by first writing \begin{equation} r_{12}^2 = r_1^2 + r_2^2 - 2r_1r_2\cos\theta_{12} \end{equation} and, from the spherical harmonic addition theorem, \begin{equation} \cos(\theta_{12})=\frac{4\pi}{3}\sum^1_{m=-1}Y^{m*}_1(\theta_1,\varphi_1)Y^m_1(\theta_2,\varphi_2) \end{equation} Consider first the S-states. The \(r_{12}^0\) terms are like the ss terms in a CI calculation. The \(r_{12}^2\) terms bring in p-p type contributions, and the higher powers bring in d-d, f-f etc. type terms. In general

\begin{equation} P_l(\cos\theta_{12}) = \frac{4\pi}{2l+1}\sum^l_{m=-l}{Y^{m}_l}^*(\theta_1,\varphi_1)Y^m_l(\theta_2,\varphi_2)\nonumber \end{equation}

For P-states, one would have similarly

\begin{array}{lr} r_{12}^0 & (sp)P\nonumber\\ r_{12}^2 & (pd)P\nonumber\\ r_{12}^4 & (df)P\nonumber\\ \vdots & \vdots\nonumber \end{array}

For D-states

\begin{array}{lr} r_{12}^0 & (sd)D & (pp^\prime)D\nonumber\\ r_{12}^2 & (pf)D & (dd^\prime)D\nonumber\\ r_{12}^4 & (dg)D & (ff^\prime)D\nonumber\\ \vdots & \vdots & \vdots\nonumber \end{array}

In this case, since there are two "lowest-order" couplings to form a D-state, both must be present in the basis set, i.e.

\begin{equation} \Psi(r_2,r_2) = \sum c_{ijk}r_1^ir_2^{j+2}r_{12}^ke^{-\alpha r_1-\beta r_2}\mathcal{Y}^M_{022}(\hat{r}_1,\hat{r}_2) +\sum d_{ijk}r_1^{i+1}r_2^{j+1}r_{12^k}e^{-\alpha^\prime r_1 - \beta^\prime r_2}\mathcal{Y}^M_{112}(\hat{r}_1,\hat{r}_2)\nonumber \end{equation}

For F-states, one would need \((sf)F\) and \((pd)F\) terms.

For G-states, one would need \((sg)G\), \((pf)G\) and \((dd^\prime)G\) terms.

Completeness of the radial functions can be proven by considering the Sturm-Liouville problem

\begin{equation} \left(-\frac{1}{2}\nabla^2-\frac{\lambda}{r_s}-E\right)\psi({\bf r}) = 0\nonumber \end{equation}

or

\begin{equation} \left(-\frac{1}{2}\frac{1}{r^2}\frac{\partial}{\partial r}\left({r^2}\frac{\partial}{\partial r}\right) - \frac{l\left(l+1\right)}{2r^2} - \frac{\lambda}{r} - E\right)u(r) = 0.\nonumber \end{equation}

For fixed E and variable \(\lambda\) (nuclear charge).

The eigenvalues are \(\lambda_n = (E/E_n)^{1/2}\), where \(E_n =- \frac{1}{2n^2}\)

and the eigenfunctions are

\begin{equation} u_{nl}(r) = \frac{1}{(2l+1)!}\left(\frac{(n+l)!}{(n-l-1)2!}\right)^{1/2}(2\alpha)^{3/2}e^{-\alpha r}\nonumber \times \ \left( 2 \alpha r \right)^l\,_{1}F_{1}\left(-n+l+1,2l+2; 2 \alpha r \right) \end{equation}

with \(\alpha = (-2E)^{1/2}\) and \(n\geq l+1\). The confluent hypergeometric function \( _1F_1(a,b,;z)\) then denotes a finite polynomial since \(a=-n+l+1\) is a negative integer or zero.

Unlike the hydrogen spectrum, which has both a discrete part for \(E<0\) and a

continuous part for \(E>0\), this forms an entirely discrete set of finite

polynomials, called Sturmian functions. They are orthogonal with respect to

the potential, i.e.

\begin{equation} \int^\infty_0 r^2dr \left( u_{n^\prime l}(r)\frac{1}{r}u_{nl}(r) \right) = \delta_{n,n^\prime}\nonumber \end{equation}

Since they become complete in the limit \(n\rightarrow\infty\), this assures the

completeness of the variational basis set.

[See also B Klahn and W.A. Bingel Theo. Chim. Acta (Berlin) 44, 9 and 27 (1977)].

Solutions of the Eigenvalue Problem

For convenience, write

\[

\Psi({\bf r}_1,{\bf r}_2) = \sum^N_{m=1}c_m\varphi_m\nonumber

\]

where \(m\) represents the \(m\)'th combination of \(i,j,k\) and

\begin{equation} \varphi_{ijk}=r_1^ir_2^jr_{12}^ke^{-\alpha r_1 - \beta r_2}\mathcal{Y}^M_{l_1,l_2,L}(\hat{r}_1,\hat{r}_2) \pm \mbox{exchange}\nonumber \end{equation}

\begin{align}

&

\left( \begin{array}{lr}

\cos(\theta) & \sin(\theta)\\

-\sin(\theta) & \cos(\theta)

\end{array} \right)

\left( \begin{array}{lr}

H_{11} & H_{12} \\

H_{12} & H_{22}

\end{array}\right)

\left( \begin{array}{lr}

\cos(\theta) & -\sin(\theta)\\

\sin(\theta) & \cos(\theta)

\end{array} \right)

\\[5pt]

= & \left(\begin{array}{lr}

cH_{11}+sH_{12} & cH_{12} + sH_{22}\\

-sH_{11} + cH_{12} & -sH_{12} + cH_{22}

\end{array}\right)

\left( \begin{array}{cc}

c & -s \\

s & c

\end{array}\right)

\\[5pt]

= & \left(\begin{array}{lr}

c^2H_{11}+s^2H_{22} + 2csH_{12} & (c^2-s^2)H_{12}+cs(H_{22}-H_{11})\\

(c^2-s^2)H_{12}+cs(H_{22}-H_{11}) & s^2H_{11}+c^2H_{22}-2csH_{12}

\end{array}\right)

\end{align}

Therefore

\begin{equation} \left[\cos^2(\theta)-\sin^2(\theta)\right]H_{12} = \cos(\theta)\sin(\theta)(H_{11}-H_{22})\nonumber \end{equation}

and

\begin{equation} \tan(2\theta) = \frac{2H_{12}}{H_{11}-H_{22}}\nonumber \end{equation}

i.e.

\begin{equation} \cos(\theta)=\left(\frac{r+\omega}{2r}\right)^{1/2}\nonumber \end{equation}

\begin{equation} \sin(\theta)=-\mbox{sgn}(H_{12})\left(\frac{r-\omega}{2r}\right)^{1/2}\nonumber \end{equation}

where

\begin{equation} \omega = H_{22}-H_{11}\nonumber \end{equation}

\begin{equation} r=\left(\omega^2+4H_{12}^2\right)^{1/2}\nonumber \end{equation}

\begin{equation} E_1=\frac{1}{2}\left(H_{11}+H_{22}-r\right)\nonumber \end{equation}

\begin{equation} E_2=\frac{1}{2}\left(H_{11}+H_{22}+r\right)\nonumber \end{equation}

Brute Force Method

- Gives all the eigenvalues and eigenvectors, but it is slow

- First orthonormalize the \(N\)-dimensional basis set; i.e. form linear combinations

\begin{equation} \Phi_m = \sum_{n=1}^N\varphi_nR_{nm}\nonumber \end{equation}

such that \begin{equation} \langle \Phi_m | \Phi_n \rangle = \delta_{m,n} \end{equation}

This can be done by finding an orthogonal tranformation T such that

\begin{equation} T^TOT = I = \left( \begin{array}{ccccc} I_1 & 0 & 0 & \ldots & 0 \\ 0 & I_2 & 0 & \ldots & 0 \\ 0 & 0 & I_3 & \ldots & 0 \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & 0 & \ldots & I_N \end{array}\right); \end{equation}

\begin{equation} O_{mn} = \langle \varphi_m | \varphi_n \rangle \nonumber \end{equation}

and then applying a scale change matrix

\begin{equation} S = \left(\begin{array}{ccccc} \frac{1}{I_1^{1/2}} & 0 & 0 & \ldots & 0\\ 0 & \frac{1}{I_2^{1/2}} & 0 & \ldots & 0 \\ 0 & 0 & \frac{1}{I_3^{1/2}} & \ldots & 0 \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & 0 & \ldots & I_N^{1/2} \end{array}\right)= S^T\nonumber \end{equation}

Then

\begin{equation} S^TT^TOTS = 1 \end{equation}

i.e.

\begin{equation} R^TOR = 1 \end{equation}

with \(R=TS\).

If H is the matrix with elements \(H_{mn} = \langle \varphi_m | \varphi_n \rangle \), then H expressed in the \(\Phi_m\) basis set is

\begin{equation} H^\prime = R^THR.\nonumber \end{equation}

We next diagonalize \(H^\prime\) by finding an orthogonal transformation W such that

\begin{equation} W^T H^\prime W = \lambda = \left( \begin{array}{cccc} \lambda_1 & 0 & \ldots & 0 \\ 0 & \lambda_2 & \ldots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \ldots & \lambda_N \end{array}\right)\nonumber \end{equation}

The q'th eigenvector is

\begin{equation} \Psi^{(q)} = \sum_{n=1}^N\Phi_n W_{n,q} = \sum_{n,n^\prime} \varphi_{n^\prime} R_{n^\prime ,n} W_{n,q} \nonumber \end{equation}

i.e.

\begin{equation} c_{n^\prime}^{(q)} = \sum_{n=1}^N R_{n^\prime n} W_{n,q} \end{equation}

The Power Method

- Based on the observation that if H has one eigenvalue, \(\lambda_M\), much bigger than all the rest, and \(\chi = \left( \begin{array}{c}a_1\\a_2\\\vdots\end{array}\right)\) is an arbitrary starting vector, then \(\chi = \sum_{q=1}^N x_q\Psi^{(q)}\).

\begin{equation} (H)^n\chi = \sum_{q=1}^N x_q \lambda^n_q\Psi^{(q)}\nonumber\rightarrow x_M\lambda_M^n\Psi^{(M)}\nonumber \end{equation}

provided \(x_M\neq 0\).

To pick out the eigenvector corresponding to any eigenvalue, write the original problem in the form

\begin{align} H\Psi & = \lambda O \Psi \nonumber \\ \left( H - \lambda_q O \right) \Psi & = \left( \lambda - \lambda_q \right) O \Psi \nonumber \end{align}

Therefore,

\begin{equation} G \Psi = \frac{1}{\lambda - \lambda_q } \Psi \nonumber \\ \end{equation}

where \(G=(H-\lambda_qO)^{-1}O\) with eigenvalues \(\frac{1}{\lambda_n - \lambda_q}\).

By picking \(\lambda_q\) close to any one of the \(\lambda_n\), say \(\lambda_{n^\prime}\), then \(\frac{1}{\lambda_n-\lambda_q}\) is much larger for \(n=n^\prime\) than for any other value. The sequence is then

\begin{array}{c} \chi_1=G\chi\nonumber\\ \chi_2=G\chi_1\nonumber\\ \chi_3=G\chi_2\nonumber\\ \vdots\nonumber \end{array}

until the ratios of components in \(\chi_n\) stop changing.

- To avoid matrix inversion and multiplication, note that the sequence is equivalent to \begin{equation} F \chi_n = \left( \lambda - \lambda_q \right) O \chi_{n-1} \nonumber \\ \end{equation} where \(F = H-\lambda_qO\). The factor of \(\left( \lambda - \lambda_q \right)\) can be dropped because this only affects the normalization of \(\chi_n\). To find \(\chi_n\), solve \begin{equation} F \chi_n = O \chi_{n-1} \nonumber \end{equation} (N equations in N unknowns). Then \begin{equation} \lambda = \frac{ \langle \chi_n | H | \chi_n \rangle}{\langle \chi_n | \chi_n \rangle} \nonumber \end{equation}

Matrix Elements of H

\begin{equation} H=-\frac{1}{2}\nabla^2_1 -\frac{1}{2}\nabla^2_2 - \frac{1}{r_1} - \frac{1}{r_2} +\frac{Z^{-1}}{r_{12}}\nonumber \end{equation}

Taking \(r_1, r_2\) and \(r_{12}\) as independent variables,

\begin{equation} \nabla_1^2 = \frac{1}{r_1^2}\frac{\partial}{\partial r_1}\left(r_1^2\frac{\partial}{\partial r_1}\right)+ \frac{1}{r_{12}^2}\frac{\partial}{\partial r_{12}} \left(r_{12}^2\frac{\partial}{\partial r_{12}}\right)\nonumber -\frac{l_1(l_1+1)}{r_1^2}+2(r_1 - r_2\cos(\theta_{12}))\frac{1}{r_{12}}\frac{\partial^2}{\partial r_1 \partial r_{12}} \nonumber - 2(\nabla_1^Y \cdot {\bf r}_2)\frac{1}{r_{12}}\frac{\partial}{\partial r_{12}}\nonumber \end{equation}

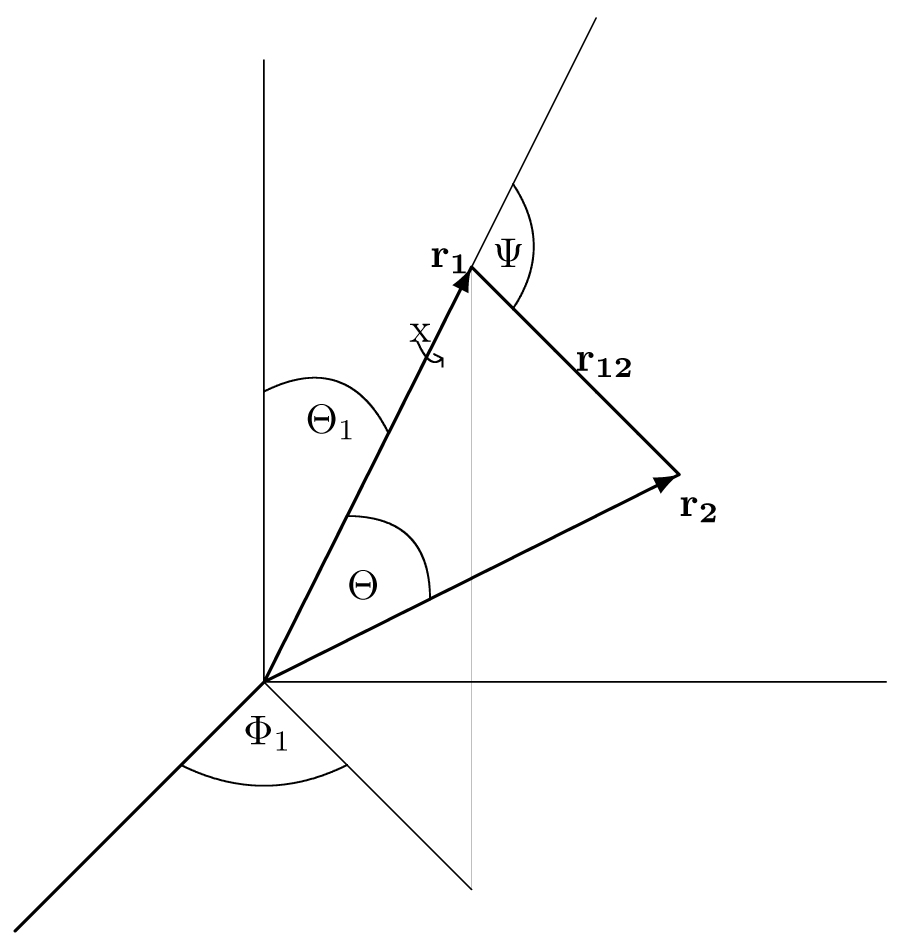

where \(\nabla_1^Y\) acts only on the spherical harmonic part of the wave function and the diagram

defines the complete set of 6 independent variables is \(r_1\), \(r_2\), \(r_{12}\), \(\theta_1\), \(\varphi_1\), \(\chi\).

If \(r_{12}\) were not an independent variable, then one could take the volume element to be

\begin{equation} d\tau = r_1^2dr_1\sin(\theta_1)d\theta_1d\varphi_1r_2^2dr_2\sin(\theta_2)d\theta_2d\varphi_2.\nonumber \end{equation}

However, \(\theta_2\) and \(\varphi_2\) are no longer independent variables. To eliminate them, take the point \({\bf r}_1\) as the origin of a new polar co-ordinate system, and write

\begin{equation} d\tau=-r_1^2dr_1\sin(\theta_1)d\theta_1d\varphi_1r_{12}^2dr_{12}\sin(\psi)d\psi d\chi\nonumber \end{equation}

and use

\begin{equation} r_2^2=r_1^2+r_{12}^2 +2r_1r_{12}\cos(\psi) \end{equation}

Then for fixed \(r_1\) and \(r_{12}\),

\begin{equation} 2r_2dr_2 = -2r_1r_{12}\sin(\psi)d\psi \end{equation}

Thus

\begin{equation} d\tau= r_1dr_1r_2dr_2r_{12}dr_{12}\sin(\theta_1)d\theta_1d\varphi_1d\chi \end{equation}

The basic type of integral to be calculated is

\begin{align} I \left( l_1, m_1, l_2, m_2; R \right) & = \int \sin \left( \theta_1 \right) d\theta_1 d\varphi_1 d\chi Y^{m_1}_{l_1} \left( \theta_1, \varphi_1 \right)^{*} Y^{m_2}_{l_2} \left( \theta_2, \varphi_2 \right) \\ & \times \int r_1dr_1r_2dr_2r_{12}dr_{12}R \left( r_1, r_2, r_{12} \right) \end{align}

Consider first the angular integral. \(Y^{m_2}_{l_2} \left( \theta_2, \varphi_2 \right)\) can be expressed in terms of the independent variables \(\theta_1, \varphi_1, \chi\) by use of the rotation matrix relation \begin{equation} Y^{m_2}_{l_2}( \theta_2, \varphi_2) = \sum_m \mathcal{D}^{(l_2)}_{m_2,m}(\varphi_1,\theta_1,\chi)^*Y^m_{l_2}( \theta_{12}, \varphi) \end{equation} where \(\theta, \varphi\) are the polar angles of \({\bf r}_2\) relative to \({\bf r}_1\). The angular integral is then

\begin{align} I_{ang} & = \int^{2 \pi}_{0} d\chi \int^{2\pi}_{0} d\varphi_1 \int^{\pi}_{0} \sin \left( \theta_1 \right) d\theta_{1} Y^{m_1}_{l_1} \left( \theta_1, \varphi_1 \right)^{*} \\ & \times \sum_m \mathcal{D}^{(l_2)}_{m_2,m} \left( \varphi_1,\theta_1,\chi \right)^{*} Y^{m}_{l_2} \left( \theta_{12},\varphi \right) \\ \end{align}

Use

\begin{equation} Y^{m_1}_{l_1} \left( \theta_1, \varphi_1 \right)^{*} = \sqrt{\frac{2l_1+1}{4\pi}}\mathcal{D}^{(l_1)}_{m_1,0} \left( \varphi_1, \theta_1, \chi \right) \end{equation}

together with the orthogonality property of the rotation matrices (Brink and Satchler, p 147)

\begin{align} & \int \mathcal{D}^{(j)*}_{m,m^\prime}\mathcal{D}^{(J)}_{M,M^\prime}\sin(\theta_1)d\theta_1d\varphi_1d\chi \\ & = \frac{8\pi^2}{2j+1}\delta_{jJ}\delta_{mM}\delta_{m^\prime M^\prime}\nonumber \end{align}

to obtain

\begin{align} I_{ang} & = \sqrt{ \frac{2l_1+1}{4\pi}} \frac{8\pi^2}{2l_1+1} \delta_{l_1,l_2} \delta_{m_1,m_2} Y^{0}_{l_2} \left( \theta_{12}, \varphi \right) \\ & = 2 \pi \delta_{l_1,l_2} \delta_{m_1,m_2} P_{l_2} \left( \cos \theta_{12} \right) \end{align}

since

\begin{equation} Y^0_{l_2} \left( \theta_{12}, \varphi \right) = \sqrt{\frac{2l_1+1}{4\pi}} P_{l_2} \left( \cos \left( \theta_{12} \right) \right) \end{equation}

Note that \(P_{l_2} \left( \cos \theta \right)\) is just a short hand expression for a radial function because

\begin{equation} \cos \theta_{12} = \frac{r_1^2 + r_2^2 - r_{12}^2}{2r_1r_2} \end{equation}

The original integral is thus

\begin{equation} I \left( l_1, m_1, l_2, m_2; R \right) = 2 \pi \delta_{l_1,l_2} \delta_{m_1,m_2} \int^{\infty}_{0} r_{1} dr_{1} \int^{\infty}_{0} r_{2} dr_{2} \int^{r_{1} + r_{2}}_{|r_{1} - r_{2}|} r_{12} dr_{12} R \left( r_{1}, r_{2}, r_{12} \right) P_{l_2} \left( \cos \theta_{12} \right) \end{equation}

where again

\begin{equation} \cos \theta_{12} = \frac{r_{1}^{2} +r^{2}_{2} - r_{12}^{2}}{2 r_{1} r_{2}} \end{equation}

is a purely radial function.

The above would become quite complicated for large \(l_2\) because \(P_{l_2}(\cos \theta_{12})\) contains terms up to \(\left( \cos \theta_{12} \right)^{l_2}\). However, recursion relations exist which allow any integral containing \(P_l \left( \cos \theta_{12} \right)\) in terms of those containing just \(P_0 \left( \cos \theta_{12} \right) = 1\) and \(P_1 \left( \cos \theta_{12} \right) = \cos \theta_{12}\).

Radial Integrals and Recursion Relations

The basic radial integral is [see G.W.F. Drake, Phys. Rev. A 18, 820 (1978)]

\begin{align} I_0 \left( a, b, c \right) & = \int^{\infty}_{0} r_{1} dr_{1} \int^{\infty}_{r_{1}} r_{2} dr_{2} \int^{r_{1}+r_{2}}_{r_{2} - r_{1}} r_{12} dr_{12} r_{1}^{a} r_{2}^{b} r_{12}^{c} e^{-\alpha r_{1} - \beta r_{2}} + \int^{\infty}_{0} r_{2} dr_{2} \int^{\infty}_{r_{2}} r_{1} dr_{1} \int^{r_{1} + r_{2}}_{r_{1} - r_{2}} r_{12} dr_{12} r_{1}^{a} r_{2}^{b} r_{12}^{c} e^{- \alpha r_{1} - \beta r_{2}} \\ & = \frac{2}{c+2} \sum^{[(c+1)/2]}_{i=0} \left(\begin{array}{c} c+2 \\ 2i+1 \end{array} \right) \left\{ \frac{q!}{\beta^{q+1} \left( \alpha + \beta \right)^{p+1}} \sum_{j=0}^{q} \frac{\left( p + j \right)!}{j!} \left( \frac{\beta}{\alpha + \beta} \right)^j + \frac{q^{\prime}!}{\alpha^{q^{\prime}+1} \left( \alpha + \beta \right)^{p^{\prime}+1}} \sum^{q^{\prime}}_{j=0} \frac{\left( p^{\prime} + j \right)!}{j!} \left( \frac{\alpha}{\alpha + \beta} \right)^j \right\} \end{align}

where

\begin{array}{ll} p = a + 2i + 2 & p^{\prime} = b + 2i + 2 \\ q = b + c - 2i + 2 & q^{\prime} = a + c - 2i + 2 \end{array}

The above applies for \( a, b \geq -2, c\geq -1\). \([x]\) means "greatest integer in" \(x\).

Then

\begin{align} I_1 \left( a, b, c \right) & = \int d\tau_{r} r_{1}^{a} r_{2}^{b} r_{12}^{c} e^{ -\alpha r_{1} - \beta r_{2}} P_{1}\left( \cos \theta \right) \\ & = \frac{1}{2} \left( I_{0} \left( a + 1, b - 1, c \right) + I_{0} \left( a - 1, b + 1, c \right) - I_{0} \left( a - 1, b - 1, c + 2 \right) \right) \end{align}

The Radial Recursion Relation

Recall that the full integral is

\begin{equation} I(l_1m_1,l_2m_2;R)=2\pi\delta_{l_1,l_2}\delta_{m_1,m_2}I_{l_2}(R) \end{equation}

where

\begin{equation} I_{l_2}(R) = \int d\tau_r R(r_1,r_2,r_{12})P_{l_2}(\cos \theta_{12}) \end{equation}

To obtain the recursion relation, use

\begin{equation} P_l(x) = \frac{[P^{\prime}_{l+1}(x)-P^{\prime}_{l-1}(x)]}{2l+1} \end{equation}

with

\begin{equation} P_{l+1}^\prime (x) = \frac{d}{dx}P_{l+1}(x) \end{equation}

Here \(x=\cos\theta_{12}\) and

\begin{align} \frac{d}{d \cos \theta_{12}} & = \frac{dr_{12}}{d \cos \theta_{12}} \frac{d}{dr_{12}} \\ & = -\frac{r_{1} r_{2}}{r_{12}} \frac{d}{dr_{12}} \end{align}

Then,

\begin{equation} I_{l}\left( R \right) = - \int d\tau_r R \frac{r_{1} r_{2}}{r_{12}} \frac{d}{dr_{12}} \frac{ \left[ P_{l+1} \left( \cos \theta_{12} \right) - P_{l-1} \left( \cos \theta_{12} \right) \right]}{2l+1} \end{equation}

The \(r_{12}\) part of the integral is

\begin{align} & \int^{r_{1} + r_{2}}_{|r_{1} - r_{2}|} r_{12} dr_{12} R \frac{r_{1} r_{2}}{r_{12}} \frac{d}{dr_{12}} \left[P_{l+1}-P_{l-1} \right] \\ & = R r_{1} r_{2} \left[ P_{l+1} - P_{l-1} \right]^{r_{1} + r_{2}}_{|r_{1} - r_{2}|} - \int^{r_{1} + r_{2}}_{|r_{1} - r_{2}|} r_{12} dr_{12} \left( \frac{d}{dr_{12}} R \right) \frac{r_{1} r_{2}}{r_{12}} \frac{ \left[ P_{l+1} - P_{l-1} \right]}{2l+1} \end{align}

The integrated term vanishes because

\begin{equation} \cos \theta_{12} = \frac{r_{1}^{2} + r_{2}^{2} - r_{12}^{2}}{2 r_{1} r_{2}} \begin{array}{lll} & = -1 & {\rm when} & r_{12}^{2} = \left( r_{1} + r_{2} \right)^{2} \\ & = 1 & {\rm when} & r_{12}^{2} = \left( r_{1} - r_{2} \right)^{2} \end{array} \end{equation}

and \(P_l(1)=1\), \(P_{l}(-1)=(-1)^{l}\).

Thus,

\begin{equation} I_{l+1}\left(\frac{r_1r_2}{r_{12}}R^\prime\right) = \left( 2l+1 \right) I_{l}(R) - I_{l-1} \left( \frac{r_{1} r_{2}}{r_{12}} R^{\prime} \right) \end{equation}

If

\begin{equation} R = r_{1}^{a-1} r_{2}^{b-1} r_{12}^{c+2} e^{-\alpha r_{1} - \beta r_{2}} \end{equation}

then [G.W.F. Drake, Phys. Rev. A 18, 820 (1978)]

\begin{equation} I_{l+1}(r_1^ar_2^br_{12}^c) = \frac{2l+1}{c+2} I_{l} \left( r_{1}^{a-1} r_{2}^{b-2} r_{12}^{c+2} \right)+I_{l-1} \left( r_{1}^{a} r_{2}^{b} r_{12}^{c} \right) \end{equation}

For the case \(c=-2\), take

\begin{equation} R = r_{1}^{a-1} r_{2}^{b-1} \ln r_{12} e^{ -\alpha r_{1} -\beta r_{2}} \end{equation}

Then

\begin{equation} I_{l+1} \left( r_{1}^{a} r_{2}^{b} r_{12}^{c} \right) = I_{l} \left( r_{1}^{a-1} r_{2}^{b-1} \ln r_{12} \right) \left( 2l + 1 \right) - I_{l-1} \left( r_{1}^{a} r_{2}^{b} r_{12}^{-2} \right) \end{equation}

This allows all \(I_{l}\) integrals to be calculated from tables of \(I_0\) and \(I_1\) integrals.

The General Integral

The above results for the angular and radial integrals can now be combined into a general formula for integrals of the type

\begin{equation} I = \int \int d{\bf r}_{1} d{\bf r}_{2} R_{1} \mathcal{Y}^{M^{\prime}}_{l^{\prime}_{1} l^{\prime}_{2} L^{\prime}} \left( \hat{r}_{1}, \hat{r}_{2} \right) T_{k_{1} k_{2} K}^{Q} \left( {\bf r}_{1},{\bf r}_{2} \right) R_{2} \mathcal{Y}_{l_{1} l_{2} L}^{M} \left( \hat{r}_{1}, \hat{r}_{2} \right) \end{equation}

where

\begin{equation} \mathcal{Y}^{M}_{l_{1} l_{2} L} \left( \hat{r}_{1}, \hat{r}_{2} \right) = \sum_{m_1,m_2} \langle l_{1} l_{2} m_{1} m_{2} | LM \rangle Y^{m_{1}}_{l_{1}} \left( \hat{r}_{1} \right) Y^{m_{2}}_{l_{2}} \left( \hat{r}_{2} \right) \end{equation}

and

\begin{equation} T^Q_{k_{1} k_{2} K} \left( {\bf r}_{1}, {\bf r}_{2} \right) = \sum_{q_{1}, q_{2}} \langle k_{1} k_{2} q_{1} q_{2} | KQ \rangle Y_{k_{1}}^{q_{1}} \left( \hat{r}_{1} \right) Y^{q_{2}}_{k_{2}} \left( \hat{r}_{2} \right) \end{equation}

The basic idea is to make repeated use of the formula

\begin{equation} Y^{m_{1}}_{l_{1}} \left( \hat{r}_{1} \right) Y^{m_{2}}_{l_{2}} \left( \hat{r}_{1} \right) = \sum_{l m} \left( \frac{\left( 2l_{1}+1 \right) \left(2l_{2}+1 \right) \left(2l+1 \right)}{4 \pi} \right)^{1/2} \times \left( \begin{array}{ccc} l_1 & l_2 & l \\ m_1 & m_2 & m \end{array} \right) \left(\begin{array}{ccc} l_1 & l_2 & l \\ 0 & 0 & 0 \end{array} \right) Y^{m*}_{l} \left( \hat{r}_{1} \right) \end{equation}

where

\begin{equation} Y^{m*}(\hat{r}) = (-1)^mY_l^{-m}(\hat{r}) \end{equation}

and

\begin{equation} \left(\begin{array}{ccc} l_1 & l_2 & l \\ m_1 & m_2 & m \end{array} \right) = \frac{\left( -1 \right)^{l_{1} - l_{2} - m}}{\left( 2l + 1 \right)^{1/2}} \left( l_{1} l_{2} m_{1} m_{2} | l, -m \right) \end{equation}

is a 3-\(j\) symbol. In particular, write

\begin{array}{cc} Y^{m_1^\prime}_{l_1^\prime}(\hat{r}_1)^{*} \underbrace{Y_{k_{1}}^{q_{1}} \left( \hat{r}_{1} \right) Y^{m_{1}}_{l_{1}} \left( \hat{r}_{1} \right)} & = & \sum_{\Lambda M}(\ldots)Y^{M}_\Lambda(\hat{r}_1)\\ \ \ \ \ \ \ \ \sum_{\lambda_1\mu_1}Y_{\lambda_1}^{\mu_1}(\hat{r}_1) & & \\ & & \\ Y^{m_{2}^{\prime}}_{l_{2}^{\prime}} \left( \hat{r}_{2} \right) \underbrace{Y^{q_{2}}_{k_{2}} \left( \hat{r}_{2} \right) Y_{l_{2}}^{m_{2}} \left( \hat{r}_{2} \right)} & = & \sum_{\Lambda^{\prime} M^{\prime}} \left( \ldots \right)Y_{\Lambda^{\prime}}^{M^{\prime}*} \left( \hat{r}_{2} \right) \\ \ \ \ \ \ \ \ \ \ \sum_{\lambda_{2} \mu_{2}}Y_{\lambda_{2}}^{\mu_{2}} \left( \hat{r}_{2} \right) & & \end{array}

The angular integral then gives a factor of \(2 \pi \delta_{\Lambda, \Lambda^{\prime}} \delta_{M, M^{\prime}} P_{\Lambda} \left( \cos \theta_{12} \right)\). The total integral therefore reduces to the form

\begin{equation} I = \sum_{\Lambda} C_{\Lambda} I_{\Lambda} \left( R_{1} R_{2} \right) \end{equation}

where \(C_{\Lambda} = \sum_{\lambda_{1}, \lambda_{2}} C_{ \lambda_{1}, \lambda_{2}, \Lambda}\). For further details and derivations, including graphical representations, see G.W.F. Drake, Phys. Rev. A \({\bf 18}\), 820 (1978).

Graphical Representation

Matrix Elements of H

Recall that

\begin{equation} H = -\frac{1}{2} \nabla^{2}_{1} - \frac{1}{2} \nabla^{2}_{2} - \frac{1}{r_1} - \frac{1}{r_2} + \frac{Z^{-1}}{r_{12}} \end{equation}

Consider matrix elements of

\begin{equation} \nabla^2_1 = \frac{1}{r_1^2} \frac{\partial}{\partial r_1}\left(r_1^2\frac{\partial}{\partial r_1} \right) + \frac{1}{r^2}\frac{\partial}{\partial r}\left(r^2\frac{\partial}{\partial r}\right) - \frac{(\vec{l}_1^Y)^2}{r_1^2} + \frac{2 \left( r_1 - r_2 \cos \theta \right)}{r} \frac{\partial^2}{\partial r_1 \partial r} - 2 \left( \nabla^Y_1 \cdot {\bf r}_2 \right) \frac{1}{r} \frac{\partial}{\partial r} \end{equation}

where \(r \equiv r_{12}\) and \(\cos\theta \equiv \cos\theta_{12}\). Also in what follows, define \(\hat{\nabla}^Y_1 \equiv r_1\nabla^Y_1\) where \(\nabla^Y\) operates only on the spherical harmonic part of the wave function, and similarly for \(\vec{l}_1^Y\).

A general matrix element is

\begin{equation} \langle r_1^{a^\prime} r_2^{b^\prime} r_{12}^{c^\prime} e^{-\alpha^\prime r_1 - \beta^\prime r_2} \mathcal{Y}^{M^\prime}_{l^\prime_1 l^\prime_2 L^\prime} \left( \hat{r}_1, \hat{r}_2 \right) \left| \nabla^2_1 \right| r_1^a r_2^b r_{12}^c e^{-\alpha r_1 - \beta r_2} \mathcal{Y}^M_{l_1 l_2 L} \left( \hat{r}_1, \hat{r}_2 \right) \rangle \end{equation}

Since \(\nabla^2_1\) is rotationally invariant, this vanishes unless \(L=L^\prime\), \(M=M^\prime\). Also \(\nabla_1^2\) is Hermitian, so that the result must be the same whether it operates to the right or left, even though the results look very different. In fact, requiring the results to be the same yields some interesting and useful integral identities as follows:

Put

\begin{equation} \mathcal{F} = F \mathcal{Y}^M_{l_1 l_2 L} \left( \hat{r}_1, \hat{r_2} \right) \end{equation}

and

\begin{equation} F = r_1^a r_2^b r_{12}^c e^{-\alpha r_1 - \beta r_2} \end{equation}

Then

\begin{equation} \nabla^2_1 \mathcal{F} = \left\{ \frac{1}{r_1^2} \left[ a \left( a + 1 \right) - l_1 \left( l_1 + 1 \right) \right] + \frac{c \left( c + 1 \right)}{r^2} + \alpha^2 + \frac{2\alpha \left( a + 1 \right)}{r_1} + \frac{2 \left(r_1 - r_2 \cos \theta \right) }{r_1r^2}c \left( a - \alpha r_1 \right) - \frac{2c}{r^2} \left( \hat{\nabla}^Y_1 \cdot \hat{r}_2 \right) \frac{r_2}{r_1} \right\} \mathcal{F} \end{equation}

and

\begin{align} \langle \mathcal{F}^\prime \left| \nabla^2_1 \right| \mathcal{F} \rangle & = \sum_\Lambda \int d\tau_r F^\prime C_\Lambda \left( 1 \right) P_\Lambda \left( \cos \theta \right) \times \left\{ \frac{1}{r_1^2} \left[ a \left( a + 1 \right) - l_1 \left( l_1 + 1 \right) \right] -\frac{2 \alpha \left( a + 1 \right)}{r_1} + \frac{c \left( c + 1 \right)}{r^2} +\alpha^2 + \frac{2 \left( r_2 - r_2 \cos \theta \right)}{r_1r^2}c \left( a - \alpha r_1 \right) \right\} F \\ & + \sum_\Lambda \int d\tau_r F^\prime C_\Lambda \left( \hat{\nabla}^Y_1 \cdot \hat{r}_2 \right) P_\Lambda \left( \cos \theta \right) \left( \frac{-2c r_2}{r_1 r^2} \right) F \end{align}

where

\begin{equation} \int d\tau_r = \int^\infty_0 r_1 dr_1 \int^\infty_0 r_2 dr_2 \int^{r_1+r_2}_{\left| r_1 - r_2 \right|} r dr \end{equation}

For brevity, let the sum over \(\Lambda\) and the radial integrations be understood, and let \(\left( \nabla_1^2 \right)\) stand for the terms which appear in the integrand. Then operating to the right gives

\begin{equation} (\nabla_1^2)_R = \frac{1}{r_1^2} \left[ a \left( a + 1 \right) - l_1 \left( l_1 + 1 \right) \right] + \frac{c \left( c + 1 \right)}{r^2} + \alpha^2 - \frac{2 \alpha \left( a + 1 \right)}{r_1} + \frac{2 \left( r_1 - r_2 \cos \theta \right)}{r_1 r^2}c \left( a - \alpha r_1 \right) - \frac{2c r_2}{r_1 r^2} \left( \hat{\nabla}^Y_1 \cdot \hat{r}_2 \right) \end{equation}

Operating to the left gives

\begin{equation} (\nabla_1^2)_L = \frac{1}{r_1^2} \left[a^\prime \left( a^\prime + 1 \right) - l_1^\prime \left( l_1^\prime + 1 \right) \right] + \frac{c^\prime \left( c^\prime + 1 \right)}{r^2} + \alpha^{\prime 2} -\frac{2 \alpha^\prime \left( a^\prime + 1 \right)}{r_1} + \frac{2 \left( r_1 - r_2 \cos \theta \right)}{r_1 r^2} c^\prime \left( a^\prime - \alpha^\prime r_1 \right) - \frac{2 c^\prime r_2}{r_1 r^2} \left( \hat{\nabla}^{Y^\prime}_1 \cdot \hat{r}_2 \right) \end{equation}

Now put

\begin{array}{cc} a_+ = a+a^\prime, & \hat{\nabla}_1^+ = \hat{\nabla}_1^Y + \hat{\nabla}_1^{Y\prime} \\ a_{-} = a-a^\prime, & \hat{\nabla}_1^{-} = \hat{\nabla}_1^Y - \hat{\nabla}_1^{Y\prime} \end{array}

etc., and substitute \(a^\prime = a_+ - a\), \(c^\prime = c_+ - c\), and \(\alpha^\prime = \alpha_+ - \alpha\) in \((\nabla^2_1)_L\). If \(a_+\), \(c_+\) and \(\alpha_+\) are held fixed, then the equation

\begin{equation} (\nabla^2_1)_R = (\nabla^2_1)_L \end{equation}